| Default Text (translatable): | 'You need to set a Site|in order for data replication to occur.|Would you like to set it now?' |

| Occurrence 1: | When no site has been set yet. |

| Occurrence 2: | The INI file containing the site information is not found. |

| Default Text (translatable): | 'You need to set a directory|for the INCOMING data files.|Would you like to set it now?' |

| Occurrence 1: | When no incoming directory for the log files has been set yet. |

| Default Text (translatable): | 'Logging path not configured.|Would you like to select a path now?' |

| Occurrence 1: | When no path to place the log files in has been set yet. |

| Occurrence 2: | The INI file containing the site information is not found. |

| Default Text (translatable): | 'You need to set a Parent Site|in order for data replication to occur.|Would you like to set it now?' |

| Occurrence 1: | When no parent site has been set yet. |

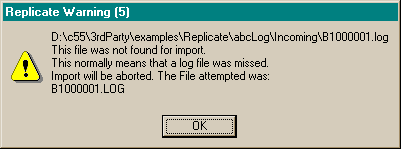

| Default Text (translatable): | FullFileName & '|This file was not found for import.|This normally means that a log file was missed.|Import will be aborted. The File attempted was:' & LogFileName. |

| Occurrence 1: | Replicate is attempting to import a log file that it is not ready for, i.e. the sequence of log files has been broken. For example: the file to be imported is B1000004.log, but files have only been imported up to B1000002.log. This means that file B1000003.log has been missed. The missing file will automatically be requested from the respective site. |

| Occurrence 2: | Replicate is attempting to import a log file to which another program (e.g. a text editor) has exclusive access. Quit the other program so that Replicate can use the file. |
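The sequence check described in Occurrence 1 can be illustrated with a short Python sketch. It is not Replicate's implementation: the naming scheme (4 site characters followed by a 4-digit sequence number) is an assumption inferred from the example file names, and `parse_log_name` and `missing_log_files` are hypothetical helpers.

```python
import re

# Assumed naming scheme (inferred from the example names, not confirmed):
# 4 alphanumeric site characters followed by a 4-digit sequence number.
LOG_NAME = re.compile(r"^([A-Za-z0-9]{4})(\d{4})\.log$", re.IGNORECASE)


def parse_log_name(file_name):
    """Split a name such as 'B1000004.log' into (site, sequence)."""
    match = LOG_NAME.match(file_name)
    if match is None:
        raise ValueError(f"unexpected log file name: {file_name}")
    return match.group(1), int(match.group(2))


def missing_log_files(last_imported, arrived):
    """Return the names of the log files skipped between two files of one site."""
    site, last_seq = parse_log_name(last_imported)
    arrived_site, arrived_seq = parse_log_name(arrived)
    if site != arrived_site:
        raise ValueError("files belong to different sites")
    # Every sequence number strictly between the two files has been missed.
    return [f"{site}{seq:04d}.log" for seq in range(last_seq + 1, arrived_seq)]


print(missing_log_files("B1000002.log", "B1000004.log"))  # ['B1000003.log']
```

An empty list means the arriving file is the next one in sequence and can be imported.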

| Default Text (translatable): | 'If you are doing conditional replication (i.e. only distributing subsets of data)|then you need to specify the highest site ID for the data in this subset.|Would you like to set it?' |

| Occurrence 1: | When no parent site has been set yet and the 'Never' button was not pressed previously. |

| Default Text (translatable): | 'Error: The Site name in the file name|is different to the Site name in the header of the file:' |

| Occurrence 1: | Occurs if the log file has been renamed. This is a basic check to ensure that the site name in the header matches the site name in the file name. |

| Default Text (translatable): | 'You need to set a directory|for the OUTGOING data files.|Would you like to set it now?' |

| Occurrence 1: | When no outgoing directory has been set yet. |

| Occurrence 2: | The INI file containing the site information is not found. |

| Default Text (translatable): | 'Some of the Email settings|have not been set-up yet.|Would you like to set them now?' |

| Occurrence 1: | When some/all of the Email settings have not been set-up and you are using the csLogConnectionManager class. |

| Default Text (translatable): | 'A log file for a new site has been received. Would you like to auto add this site?' |

| Occurrence 1: | When a log file arrives in the incoming directory, and the log file's site is not registered in our site file as a related site. |

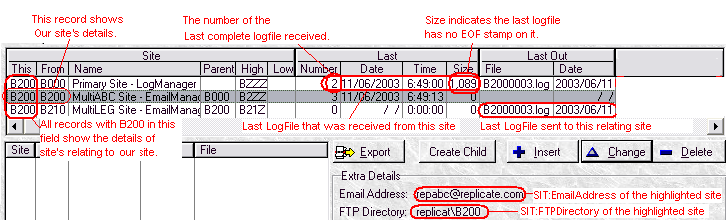

| Default Text (translatable): | 'You have more than Incoming log file to be imported|but one prior to the last does not have an <EOF> stamp.|File: ' & ImportFileName |

| Occurrence 1: | When a log file arrives with a higher number than the previously received log file, and the previous one was not received complete (i.e. with an <End of File> tag at the end). This normally occurs when a LogManager is set up incorrectly: it sends the first log file to the wrong place. The destination is then corrected, but in the meantime the log file number has incremented. The next log file is therefore sent to the correct place, while the LogManager believes that it has already sent the first one correctly, so only the second log file is received at the relating site's LogManager. At that point the warning occurs and a request for the missing log file is immediately generated. This message should only occur until the next ProcessLogFiles is issued from the sending site. You can suppress this warning, but it can be useful if there is a problem. If the warning persists, the request from the recipient site is not getting through to the sending site. Make sure that your site info (at the recipient site) is set up correctly so that it can send the request file to the sending site to request the missing log file(s). |

| Occurrence 2: | This occurs if the received log file that has the EOF stamp is smaller than a previous file of the same name. This is normally the result of manual intervention with log files (e.g. manually editing log files or deleting log files from the log path). To solve this, reset the incoming counters at the LogManager that is attempting to import the log file. The record whose counters must be reset is the one where ThisSite = the SiteID of that LogManager and FromSite = the first 4 alphanumeric characters of the file name. |

| Default Text (translatable): | 'The Required path for replicating files was not found.|Would you like to create the following directory now?' |

| Occurrence 1: | If you have set up a relating site's InDir (in the site file) but the directory does not exist, you will be prompted with this message. |

| Occurrence 2: | If you are replicating logfiles, but the incoming directory or the outgoing directory is not located, then you can create it. |

| Default Text (translatable): | 'Some of the FTP settings are not setup yet.|Would you like to set these up now?' |

| Occurrence 1: | Your FTP settings have not been completely set up. If you don't require FTP for this site, click the 'Never' button. |

| Default Text (translatable): | 'If you are doing conditional replication (i.e. only distributing subsets of data)|then you can specify the lowest site ID for the data in this subset|(if it is not the same as this site''s Site ID).|Would you like to set it?' |

| Occurrence 1: | You have set up your LogManager to allow for a SiteLow that is not the same as your Site ID. If SiteLow is the same as your Site ID, click the 'Never' button. |

| Default Text (translatable): | 'The Site value for the low range is higher than the SiteID itself.|You need to set one that is lower. Redo Now?' |

| Occurrence 1: | Incorrect SiteLow setting. |

| Default Text (translatable): | 'The Site value for the high range is lower than the SiteID itself.|You need to set one that is higher. Redo Now?' |

| Occurrence 1: | Incorrect SiteHi setting. |
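The two range messages above amount to requiring SiteLow ≤ SiteID ≤ SiteHi. A minimal Python sketch of that check follows; it assumes that site IDs can be compared as integers, and `check_site_range` is a hypothetical name, not part of Replicate.

```python
def check_site_range(site_id, site_low, site_hi):
    """Return a list of problems with the SiteLow/SiteHi settings (empty if valid)."""
    problems = []
    if site_low > site_id:
        # Corresponds to the "low range is higher than the SiteID" message.
        problems.append("SiteLow is higher than the SiteID")
    if site_hi < site_id:
        # Corresponds to the "high range is lower than the SiteID" message.
        problems.append("SiteHi is lower than the SiteID")
    return problems


print(check_site_range(site_id=10, site_low=5, site_hi=20))   # []
print(check_site_range(site_id=10, site_low=12, site_hi=20))  # ['SiteLow is higher than the SiteID']
```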

| Default Text (translatable): | 'Logging Error : Site Name not configured' |

| Occurrence 1: | There was an error configuring the site name, and an attempt is made to log a file change. |

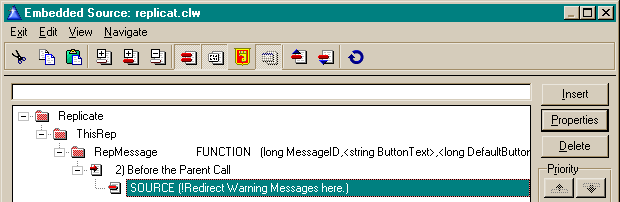

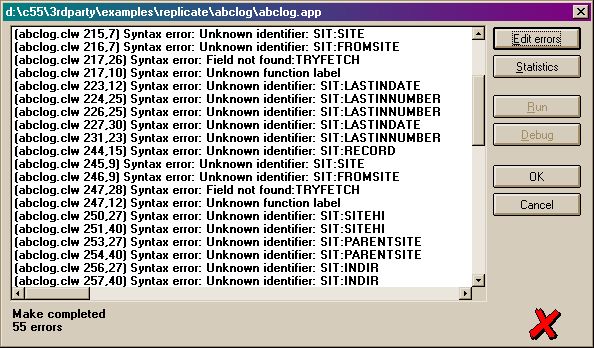

| Default Text (translatable): | 'This Array is not Registered so import will not be updated (for this field)' |

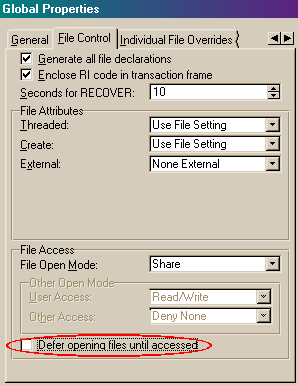

| Occurrence 1: | The array is not registered, so an array change cannot be logged. This could occur in a Multi-DLL setup where the 'Generate all files' checkbox in the Global Properties is not checked. |

| Default Text (translatable): | 'This File in your database is not registered:|' |

| Occurrence 1: | File not registered and a File change cannot be logged. This could occur in a Multi-DLL setup where the 'Generate all files' checkbox in the Global Properties is not checked. |

| Default Text (translatable): | 'This File was not available for exporting.|The exporting cannot continue until this file has been found:|' |

| Occurrence 1: | The log file specified is not available for exporting. This generally occurs when a log file has been deleted or the hard drive is corrupt. We've implemented a couple of possible solutions for this occurrence; see the Global Extension template for the implementation of MissingLogFiles. |

| Default Text (translatable): | 'The Checksum for this file is invalid, which means that the file has got corrupted.| Do you want to abort importing this file for now and request the file again?' |

| Occurrence 1: | The CRC of the logfile received is incorrect. This means that the logfile received is not the logfile sent. The logfile will not be imported - and a request for the logfile will be automatically generated. |
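The idea behind the checksum test can be sketched as follows. This document does not say which CRC variant Replicate uses or where the checksum is stored, so the sketch assumes CRC-32 (via Python's `zlib`) purely for illustration, and `crc_matches` is a hypothetical helper.

```python
import zlib


def crc_matches(payload: bytes, expected_crc: int) -> bool:
    """Return True when the CRC-32 of the received bytes equals the sender's value."""
    return (zlib.crc32(payload) & 0xFFFFFFFF) == expected_crc


# The sender computes the checksum over the bytes it is about to ship.
sent = b"ADD Customer 42 ..."
sent_crc = zlib.crc32(sent) & 0xFFFFFFFF

# A single altered byte in transit changes the checksum.
received = b"XDD Customer 42 ..."

print(crc_matches(sent, sent_crc))      # True: import can proceed
print(crc_matches(received, sent_crc))  # False: discard and request the file again
```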

| Default Text (translatable): | 'Error Creating Log File: ' <LogFileName> <error> |

| Occurrence 1 (critical): | LogFile cannot be created. This normally means that you don't have create/write rights to the directory in which the log file is being created. |

| Default Text (translatable): | 'Error Opening Log File: '<LogFileName> <error> |

| Occurrence 1 (critical): | LogFile cannot be opened. The included error will give details as to why this error has occurred. |

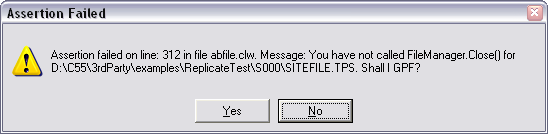

| Default Text (translatable): | 'Error Closing Log File: ' <LogFileName> <error> |

| Occurrence 1 (critical): | LogFile cannot be closed. The included error will give details as to why this error has occurred. |

| Default Text (translatable): | 'Error Trying to insert a log into the logfile:' <LogFileName> <error> 'Unable to Lock:' <LogFileName> |

| Occurrence 1 (critical): | Trying to insert a FileChange log into the log file. |

| Solution: | This is normally the result of too many applications trying to write to the log file simultaneously. You need to increase the number of times your application must attempt to write to the logfile (a setting in the global extension template on the Advanced tab). This will slow data file writing, but will ensure that replication is accurate. |
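The trade-off in this solution (more attempts mean slower writes but fewer lost log entries) can be seen in a generic retry loop. The Python sketch below only illustrates the idea and is not how the template implements it; `write_with_retries`, `max_attempts` and `delay_seconds` are hypothetical names.

```python
import time


def write_with_retries(try_write, max_attempts=5, delay_seconds=0.0):
    """Call try_write() until it succeeds or the attempts run out.

    Returns the number of attempts used, or 0 if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        if try_write():
            return attempt
        if attempt < max_attempts:
            # Give the application holding the lock time to release it.
            time.sleep(delay_seconds)
    return 0


# Simulate a log file that is locked for the first two attempts.
outcomes = iter([False, False, True])
attempts_used = write_with_retries(lambda: next(outcomes), max_attempts=5)
print(attempts_used)  # 3
```

With `max_attempts=2` the same simulated write would have failed, which corresponds to the 'Unable to Lock' error above.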

| Default Text (translatable): | 'Error registering a file:' <FileName> <error> |

| Occurrence 1 (critical): | A table in the dictionary cannot be registered in the internal Replicate file queue. This means that file changes cannot be logged. |

| Default Text (translatable): | 'Error updating (add/put/delete) a record in file:' <FileName> <error> |

| Occurrence 1 (critical): | There is an error importing a file change into one of the tables. Replicate aborts the import on the following error codes: 5, 8, 37, 75, 90. If you want to override a critical error, you can do this in the CriticalErrorInImport method. There you can decide, for your backend, which file errors are safe to continue importing past and which are not. We would rather halt the import, so that you can assess whether it is safe to continue and make the necessary change to your LogManager, than continue importing blindly when the problem could be highly critical. |

| Solution: | 1. The most common cause is running more than one LogManager on that particular site. You must run only one LogManager for each site. 2. Make sure that you've checked 'Generate all file declarations' in your LogManager application. 3. Check that you're not excluding the <FileName> file from replication (either in the dct or in the Global Extension template). |
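The kind of decision that an override in CriticalErrorInImport makes can be sketched in Python. This is not the method's actual signature or language. The abort list is taken from the text above, while `is_critical_import_error` and `safe_overrides` are hypothetical names.

```python
# Error codes on which Replicate aborts the import, as listed above.
ABORT_CODES = frozenset({5, 8, 37, 75, 90})


def is_critical_import_error(error_code, safe_overrides=frozenset()):
    """Return True when the import should halt for this error code.

    safe_overrides holds codes that you have decided are safe to
    continue past on your particular backend.
    """
    return error_code in ABORT_CODES and error_code not in safe_overrides


print(is_critical_import_error(37))                     # True: halt the import
print(is_critical_import_error(8, safe_overrides={8}))  # False: continue importing
```

Keeping the override list explicit and short means the import still halts by default on every listed code.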

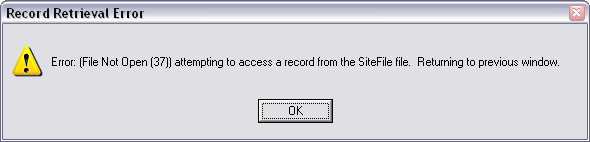

| Default Text (translatable): | 'Error opening a database file:' <FileName> <error> |

| Occurrence 1 (critical): | A table in the dictionary cannot be opened. |

| Default Text (translatable): | 'Corrupt/incomplete log file:' <FileName> <error> |

| Occurrence 1 (critical): | A logfile has been chopped off - importing this logfile (and subsequent ones) cannot be completed. |

| Default Text (translatable): | 'Error opening request file:' <FileName> <error> |

| Occurrence 1 (critical): | A Message/request file cannot be opened. |

| CapeSoft Support | |
|---|---|
| Telephone | +27 87 828 0123 |
| Fax | +27 21 715 2535 |
| Post | PO Box 511, Plumstead, 7801, Cape Town, South Africa |