Important Tips - Please read

| Question | What you need to do |
|---|---|
| Are you a first time user? | It is critical that you read the Suggested Reading sections, as adding Replicate to your application incorrectly can cause significant damage to your data! |
| Upgrading from 1.99beta or earlier? | Check out the FAQs on Upgrading from a previous version. |
| What version of Clarion are you using? | If you are upgrading your Clarion5.5 application to Clarion6, then you must read the FAQ on upgrading your LogManager from prior to beta 16. If you have C5.5F or earlier, you must read the FAQ:Compile Errors section. |
| Are you going to use Email or FTP to transport your logfiles? | The transportation layer does not form part of Replicate. You will need a transportation engine to handle the transporting of logfiles. In the examples that ship with Replicate we have used NetTalk. |

Introduction

Replicate provides an automatic, driver-independent, file-version-independent mechanism for replicating the data in two or more databases.

Basically, Replicate logs your changes, adds and deletes and then, using a transport manager of your choice, exports the changes to the other sites, where the changes, adds and deletes are imported into that data set. This is all done completely automatically, without your users having to do anything!

Replicate supports both offline and online environments.

Features

- Tracks all adds/edits and deletes - Embedded into a level below the FileDriver, Replicate tracks all adds, changes and deletes, whether hand-coded, called with the ABC methods, or generated by templates. No extra calls are required to log adds/edits and deletes.

- Field-level Replication - only logs fields that have changed (when updating a record). (For MSSQL this is only supported from 9057 and up, because of a Clarion file-driver deficiency; see Useful Tip 1.3 on how to implement this.)

- Supports Logout/Rollback/Commit

- Subset propagation - Instead of replicating all the data to all children, Replicate can be configured to send just the subset of data pertaining to that child (or range of children).

- Variable synchronization timing - You can set up Replicate to synchronize as often as you like, and you can also synchronize manually with the push of a button.

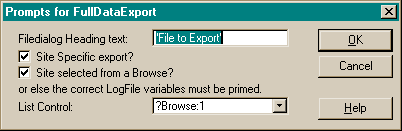

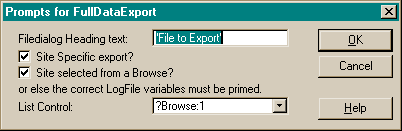

- Complete data export - A complete data

set can be exported and imported to a new site.

- Table & Field level Suppression -

Allows suppressing of specific tables and/or fields that are not

required (like calculated fields and temporary tables).

- Completely Bi-directional Replication -

Replicate will propagate all the file changes you make upwards and

downwards, i.e. to parent-sites and child-sites.

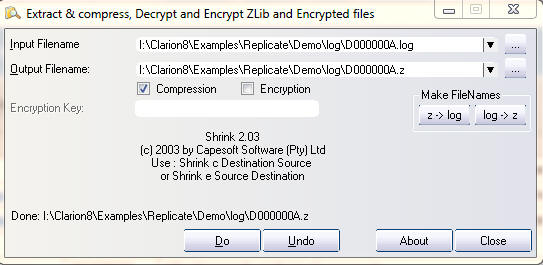

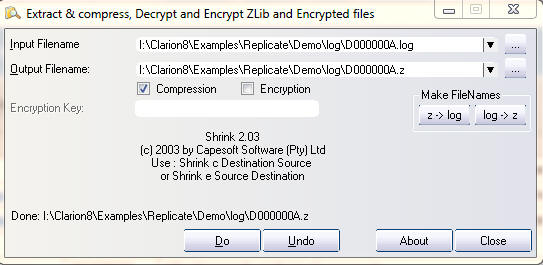

- Optional Internal LogFile compression - using Capesoft's zLib

compression classes.

- Supports Legacy, ABC and hand-coded procedures

and applications.

- Multiple Version support - only imports those fields that are valid

for the file version at the site. Not driver dependant, so you can

have a SQL file structure and a TPS file structure happily replicating

to each other.

Note: It's important to remember that Replicate is much more than a synchronization tool. While it can be used as a synchronizer in a LAN environment, you will find that most of the time Replicate works asynchronously. You set it up to process the logfiles (i.e. import and export) as often as you want, and Replicate happily works behind the scenes without your users really noticing.

Two good reasons not to use Replicate!

You have a backend (like SQL) or database that is accessed by programs other than your own, which will not be Replicate-enabled.

Unlike most SQL replication systems, Replicate works on a 'Push' system, i.e. the program must log the changes ('push' them out). This means that if a program is making changes without logging them, Replicate will not know about those changes. In this case you should use a SQL backend that has replication features, and use the SQL backend's replication. Unfortunately this restricts you to using the same SQL backend (and in some cases the same version of it) for all your related databases.

You have a database where running values must be kept up to date live (like a bank or other such running totals).

Because Replicate works with 2 or more separate data sets, each data set only receives the changes made at the other data sets at the point of synchronization. While you can perform updates as often as necessary, this remains a consideration. Live systems have their own downsides: the main ones are that they require a constant link and use bandwidth continuously.

Another Helpful Hint:

If you have a single site that you want to back up, then there are better tools available to do this (although this can be done with Replicate, the tool is not primarily focused on this feature). One example is Double-Take (http://www.nsisoftware.com), which some folks have had success with (I have not used it, so this is not my recommendation).

Help

- I'm a new user, what must I read first?

Note: Replicate is unlike the other CapeSoft products, in that it won't take a couple of minutes to add to your application. You need to read the documentation thoroughly and DO the tutorials in order to obtain a good understanding of the structural concepts. DO NOT be tempted to skim-read the docs and rush into implementing it in your application!!!

- You need to understand some of the Replicate concepts, so it's imperative that you read the Introduction for Programmers first.

- You then need to make the necessary changes to your dictionary, and have a reasonable understanding of why you are making these changes (as these will affect the way you design/change your application).

At this point you MUST compile the examples and set up the tree structure that is provided. Without this grounding you will waste a tremendous amount of time trying to get Replicate working properly.

- You will now be ready to add Replicate to your Application.

- You need to implement a mechanism for transporting the Logfiles and do the JumpStart tutorial to get 2 sites Replicating.

- NB: Read The Rules.

- RECOMMENDED: read through the Useful tips section.

- For those who want to delve a bit deeper, you can get into the Replicate classes to employ the full power of Replicate. If you are still unsure of some of the Replicate concepts, it will be a good idea to read the complete and (almost) unabridged Replicate for Dummies by James Fortune.

- If you cannot get your LogManager to Replicate, then use the trouble-shooting guide and the FAQs to help find the problem.

Introduction for Programmers

What is a Site

A Site is simply a set of data that one or more applications may use. For example, suppose we have 3 sites:

- A set of data at Head Office: You may have a sales database, with orders, etc. There are 4 (in-house) operators who handle sales by phone, and all 4 operators have access to the same database on a Fileserver. This is the first site.

- You have a roving salesman with a complete database on his laptop. This is the second site.

- You have a remote branch with another 2 operators who use the same database on a Fileserver. This is the third site.

Although there may be 7 operators running the program, there are only 3 sets of data, thus 3 sites.

The Site Tree Structure

Replicate requires a parent-child site structure.

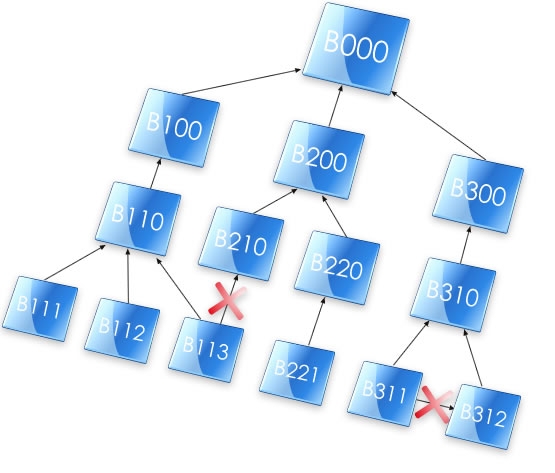

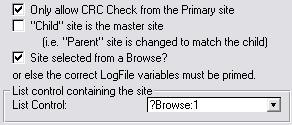

In the tree below, we could visualize B000 as the Head Office, B100, B200 and B300 as regional offices, B110, B210, B220 and B310 as branches, while the bottom layer are roving salesmen (for their allocated branches) with laptops. Thus there are 14 Sites in the diagram below. Branch B110 has 3 roving salesmen: B111, B112 and B113. It is important to note that B113 cannot go and synchronize his data with B210; he must always relate to B110. Similarly, 2 roving salesmen (B311 and B312) cannot synchronize with each other while in the field; they must return to branch B310 to synchronize their data.

Note: the arrows reflect the child-parent relationships, not the direction of replication. Replication is completely bi-directional.

The crosses indicate illegal relationships. It is illegal for a site to have more than one parent (as discussed above).

It is important to remember that a Site is one data set, but could be made up of many users/machines.

Selecting the site-tree structure best for you:

In a LAN setup you may choose to have a single database (i.e. one central site), or to have a site on each user's PC. The advantages of the second option are:

- Faster data access for reports and browses, as the data is read directly from the machine.

- If the File Server goes down, the operators can continue entering data, as they are not dependent on the FileServer to operate.

The advantages of the first option are:

- Totals (stock counts, etc.) are accurate in real time, as there is only one dataset.

- Replication is quicker (if the Site-tree gets to more than 3 tiers, you can have quite a lot of logfiles moving up and down the site-tree).

Which model is best in your situation will depend on which of these priorities matters most to you.

The Primary Site

Only one site can have no parent (the apex of the tree). This is called the Primary Site. In the above instance, B000 is the Primary Site.
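The tree rules above (one parent per site, exactly one site without a parent, logfiles exchanged only along parent-child links) can be checked before you roll out a site map. The sketch below is a language-neutral illustration written in Python. It is not part of Replicate's Clarion classes. It assumes the tree is held as a hypothetical mapping from each site identifier to its parent, with None for the Primary Site. Because a mapping holds one parent per key, the structure itself enforces the "single parent" rule. The code checks the remaining rules and says which pairs of sites may exchange logfiles.

```python
from typing import Dict, Optional

# Hypothetical representation: site identifier -> parent identifier (None = no parent).
SiteTree = Dict[str, Optional[str]]


def normalise(tree: SiteTree) -> SiteTree:
    """Upper-case every identifier, since site identifiers are case-insensitive."""
    return {site.upper(): (parent.upper() if parent else None) for site, parent in tree.items()}


def find_primary_site(tree: SiteTree) -> str:
    """Return the one site without a parent, or raise ValueError if the tree is illegal."""
    tree = normalise(tree)
    roots = [site for site, parent in tree.items() if parent is None]
    if len(roots) != 1:
        raise ValueError(f"expected exactly one Primary Site, found {roots}")
    for site, parent in tree.items():
        if parent is not None and parent not in tree:
            raise ValueError(f"{site} has an unknown parent {parent}")
    # Walk from every site towards the apex; revisiting a site means a cycle.
    for start in tree:
        seen = set()
        site = start
        while tree[site] is not None:
            if site in seen:
                raise ValueError(f"cycle detected at {site}")
            seen.add(site)
            site = tree[site]
    return roots[0]


def may_synchronize(tree: SiteTree, a: str, b: str) -> bool:
    """Sites only exchange logfiles with their direct parent or direct children."""
    tree = normalise(tree)
    a, b = a.upper(), b.upper()
    return tree.get(a) == b or tree.get(b) == a
```

With the tree from the diagram, `may_synchronize(tree, "B113", "B110")` is true, while B113 and B210 may not synchronize with each other.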

The Site Identifier and Site Numbering Tips

Each site is identified by a Site identifier, a string(4). This site field is used to associate site-related records with their relevant sites.

It is important to design a site map before implementing Replicate so that you number your sites correctly. For example, if we number the descendants of B100 in the range B100 to B1ZZ (i.e. 36*36 = 1296 possible identifiers in the B100 family, including B100 itself), then it becomes easy to think about distributing only the file changes that pertain to the B100 family instead of the entire log file. It's also a good idea to begin with a number that allows upward expansion (i.e. it allows B000 to have a parent, A000, if so desired).

Note: The Site identifier must be a unique, case-insensitive, 4-character alphanumeric string for each site.
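The identifier rule and the numbering advice above can be expressed as two small checks. This Python sketch is only an illustration. It assumes "alphanumeric" means the ASCII characters 0-9 and A-Z, and the helper names are hypothetical rather than part of Replicate. In ASCII, '0'-'9' sort before 'A'-'Z', so a plain comparison of upper-cased identifiers follows the same order as the B100 to B1ZZ range.

```python
import re

# Assumption: identifiers use ASCII 0-9 and A-Z only (compared case-insensitively).
SITE_ID_PATTERN = re.compile(r"[0-9A-Z]{4}")
SYMBOLS_PER_POSITION = 36  # 10 digits + 26 letters


def normalise_site_id(site: str) -> str:
    """Upper-case a site identifier and reject anything that is not 4 alphanumerics."""
    site_id = site.upper()
    if not SITE_ID_PATTERN.fullmatch(site_id):
        raise ValueError(f"invalid site identifier: {site!r}")
    return site_id


def in_family(site: str, low: str, high: str) -> bool:
    """True if `site` lies in the inclusive range low..high (e.g. B100..B1ZZ)."""
    s, lo, hi = (normalise_site_id(x) for x in (site, low, high))
    return lo <= s <= hi


def family_size(fixed_prefix_length: int) -> int:
    """How many identifiers share a fixed prefix, e.g. 'B1' leaves 2 free positions."""
    return SYMBOLS_PER_POSITION ** (4 - fixed_prefix_length)
```

For example, `in_family("b1a3", "B100", "B1ZZ")` is true, B200 falls outside the B100 family, and `family_size(2)` gives the 1296 identifiers mentioned above.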

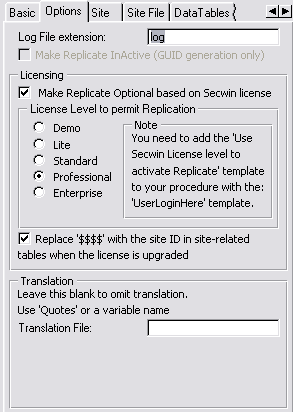

The log files

These are the files that Replicate logs the file updates to. The log files are named as follows: xxxxnnnn.log (the file extension is .log by default, but can be changed in the Global Extension Template). xxxx is the Site identifier, and nnnn is a hex number (starting at 0001 and going through to FFFF) and then, from the 65536th file onwards, an alphanumeric decoded string. The log file structure is defined in the Replicate object (so you don't need to add this to your dictionary). (The log file in more detail)
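The naming scheme above can be sketched as a pair of helpers. This is a Python illustration that assumes upper-case hex digits (as in "FFFF") and the default .log extension. The function names are hypothetical. It deliberately covers only counters 0001 to FFFF, because the alphanumeric scheme used from the 65536th file onwards isn't spelled out here.

```python
import string
from typing import Tuple

MAX_HEX_COUNTER = 0xFFFF  # 65535: the last name that uses the plain hex form


def log_file_name(site_id: str, counter: int, extension: str = ".log") -> str:
    """Build xxxxnnnn.log from a 4-character site id and a counter in 1..0xFFFF."""
    if len(site_id) != 4:
        raise ValueError("the site identifier must be 4 characters long")
    if not 1 <= counter <= MAX_HEX_COUNTER:
        # Names from the 65536th file onwards use a scheme not covered here.
        raise ValueError("counter outside the hex range 0001-FFFF")
    return f"{site_id}{counter:04X}{extension}"


def parse_log_file_name(name: str, extension: str = ".log") -> Tuple[str, int]:
    """Split a hex-style logfile name back into (site id, counter)."""
    if len(name) != 8 + len(extension) or not name.lower().endswith(extension.lower()):
        raise ValueError(f"not a xxxxnnnn{extension} name: {name!r}")
    digits = name[4:8]
    if not all(c in string.hexdigits for c in digits):
        raise ValueError(f"counter part is not hex: {digits!r}")
    return name[:4], int(digits, 16)
```

For example, the first logfile for site B100 would be `B1000001.log`, and `B100001A.log` parses back to `("B100", 26)`.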

Logfile Subsets

In most cases it will be a bit cumbersome to distribute all the changes to all the children. A complete dataset will be at the Primary Site. From there down, the datasets can be reduced, as only the data that pertains to a site and its descendants needs to be kept.

Distributing logfile subsets drastically reduces the amount of traffic on the network.

As a rule, all changes are distributed upwards to the parent site (this ensures that a complete dataset is maintained at the Primary Site), but subset distribution may occur downwards.

The LogManager Program

The LogManager program is a separate program (from your application) which runs in the background. This program handles the importing and exporting of the log files from and to the relating sites' LogManagers.

There must be one and only one LogManager program running per site.

What you need to change in your Dictionary

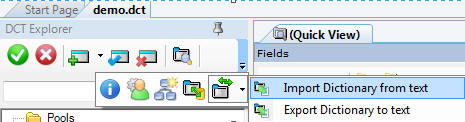

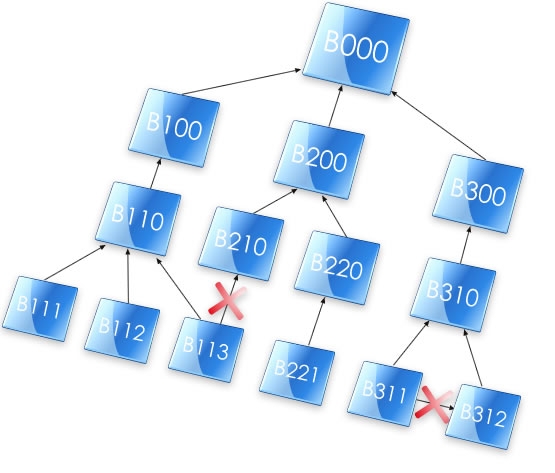

You can implement all of the following required changes using the Bulk Dictionary Editor, which ships with Replicate. The Bulk Dictionary Editor is located in the Accessories menu in the Clarion IDE. You need to export your dictionary to a text file (force the extension of the output to TXD), then run the utility on the TXD file (NOTE: do not add the SiteFile and LogHistory tables), and then re-import your TXD file to a new dictionary.

- Open your dictionary and import clarion\accessory\libsrc\win\ReplicateTables.txd into your dictionary. You should have 3 tables imported into your dictionary: the SiteFile, LogHistory and ReplicateSuppression tables.

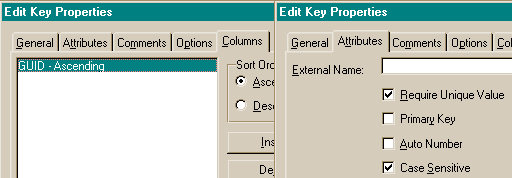

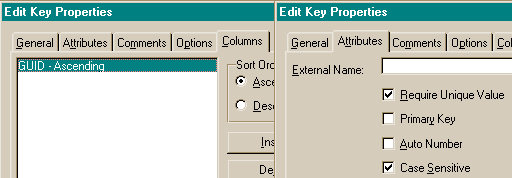

- You need to add a GUID field (and a corresponding GUID key) to each of your replicated tables (you can use the Bulk Dictionary Editor to do this).

- The GUID field must be a string(16) (NOT a cstring) and must be labeled GUID. The GUID field is only for Replicate's use and is meaningless to the programmer.

- The GUID key (label is irrelevant) must have the following properties:

- You need to add a Site

Field to each of the tables whose records

are site dependent (You can use the Bulk

Dictionary Editor to do this). The

site field should be a string4 and should be labeled in

accordance with the Site

field descriptor in your Global Template (i.e. you must have a

common label for all your site fields - like Site - although it

doesn't strictly need to be called Site, you'll save yourself some

manual labour by sticking to the default). These tables now become

Site-related tables.

All site dependant file records will be distributed upwards (to the

parent), those that are distributed downwards will depend on the value

of the Site field in the file. If the value of the Site field is <

SiteHi and > SiteLow (or Site ID if there is no SiteLow setting)

then the record will be replicated to that relating site - otherwise

it will be omitted from the logfile for that site.

It is important to think carefully about which

tables require a Site field and which ones don't.

For Example: Suppose you have 3 tables - a products table, a customers

table and an invoices table. You have 3 sites: HO, Branch1 and

Branch2. Lets say that you don't need Branch1 to see Branch2's

customers (and visa versa). Each Invoice is related to a customer.

These two tables are Site related tables and require a Site field.

You want one product list which must be viewed at all Sites (which has

stock codes, pricing, etc.). This is a 'Global' table and all changes

to this table (prices, product descriptions, etc.) must be replicated

throughout all the sites. The Products table MUST NOT have a Site

field.

NB: Site-related tables will still require a

site field, even if you are not doing subset Replication.

Still not sure? Check out FAQ2.3

for more details.

- For each table that you added the Site Field to (Step 2 above), you

must add its Site field to any existing unique keys, although it must

not be added to the GUIDKey. (You can use the Bulk

Dictionary Editor to do this)

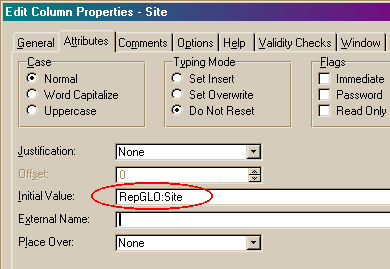

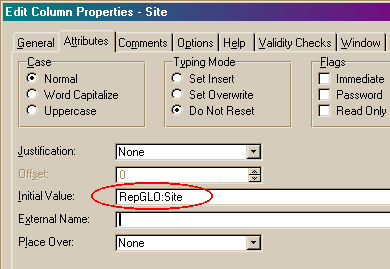

- You will need to set

the Initial Value of the Site field for Site-related tables with

auto-incrementing keys that you added the SiteField to (in step 3).

You can do this as follows (RepGLO:Site is a Global variable that the

Bulk Dictionary Editor adds to your

application):

Note: For site-related tables that have

a Many-To-One relationship with another site-related table, then you

almost certainly want to use the parent table's site value, and not

the RepGLO:Site variable as the site field must inherit it's value

from the related table.
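The distribution rule described in the Site field step can be summarised as a routing decision made for each relating site. The Python sketch below is illustrative only. The `RelatingSite` fields are hypothetical stand-ins for the SiteFile settings, and records from global tables (which have no Site field) are passed as None. The sketch also assumes both range bounds are inclusive, in line with the "B100 to B1ZZ" numbering advice. The text above states the rule with < and >, so confirm the exact boundary behaviour in your own tests.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RelatingSite:
    """Hypothetical view of the SiteFile settings for one relating site."""
    site_id: str
    is_parent: bool
    site_hi: str
    site_low: Optional[str] = None  # falls back to site_id when not set


def should_send(record_site: Optional[str], target: RelatingSite) -> bool:
    """Decide whether a logged change belongs in the logfile for `target`.

    record_site is the value of the record's Site field, or None for a
    global table (such as Products) that has no Site field.
    """
    # Everything goes up, so the Primary Site keeps a complete dataset.
    if target.is_parent:
        return True
    # Global tables are replicated throughout all the sites.
    if record_site is None:
        return True
    low = (target.site_low or target.site_id).upper()
    high = target.site_hi.upper()
    # Assumption: inclusive bounds, so a child's own records reach it.
    return low <= record_site.upper() <= high
```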

Adding Replicate to your Application

If you are still unsure of some of the Replicate concepts, it will be a good idea to read the complete and (almost) unabridged Replicate for Dummies by James Fortune.

If this is the first time that you are using Replicate, then you MUST first compile the examples and set up the tree structure that is provided before adding Replicate to your application. Without this grounding you will waste a tremendous amount of time trying to get Replicate to work properly.

- You need to make the necessary changes

to your dictionary.

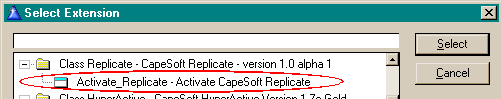

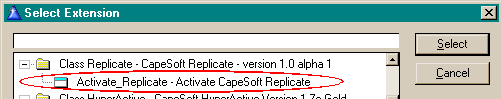

- Add the Global Extension Template to your application:

- Load your application in the Clarion IDE.

- Click the Global button

- Click the Extensions button

- Click the Insert button

- Select the Activate_Replicate

template from the Select Extension list

that appears.

- Click the Select button and the

Replicate Global Extension's prompts appear on the right-hand

portion of the Extension and Control

Templates window.

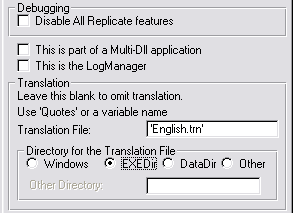

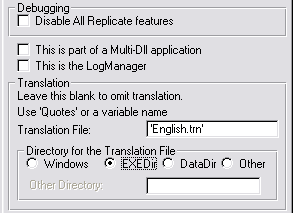

- On the Basic tab, make sure the Disable All Replicate features and the This is the LogManager checkboxes are both clear.

Leave the first 3 checkboxes unchecked if this is a standalone EXE; otherwise check out the What to do in a Multi-DLL setup section in the Useful Tips in this document. You can leave the Translation File entry blank if you don't require translation (otherwise check the Implement Translation section in the Useful Tips in this document).

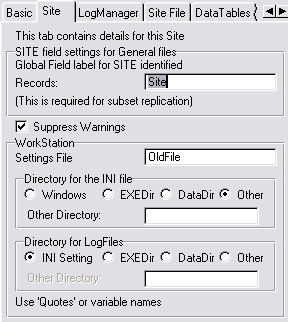

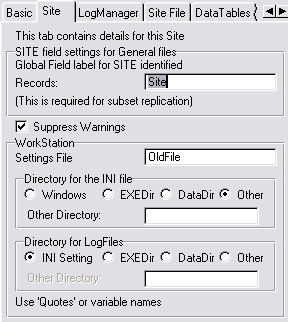

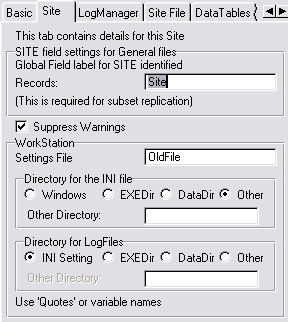

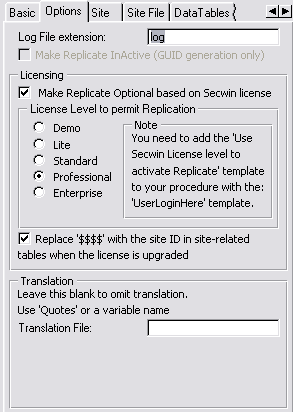

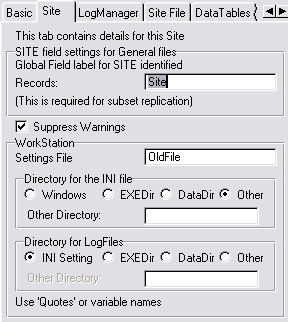

In the Global Field label for SITE identified records, enter a name which will be used to recognize the Site field in each Site-related file. This is not necessary if you are not going to be doing subset replication.

You can check the Suppress Warnings checkbox if you don't want Replicate to warn the user when it creates directories and encounters other minor errors.

Enter the Settings File name (an INI file name) that will contain the Site settings. You can use quotes for a fixed name or use a variable.

Select a Directory for the INI File from the options provided.

The Directory for LogFiles option group allows you to specify where the log files must be written. If the same site data is used across a network (by multiple users), then it is imperative that this path points to the same place for all users of the site data.

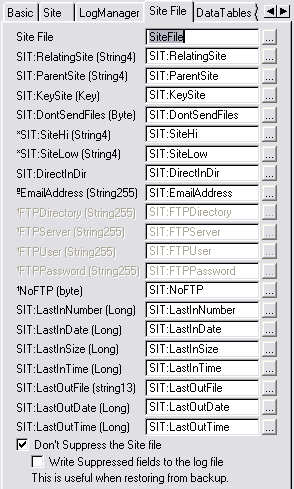

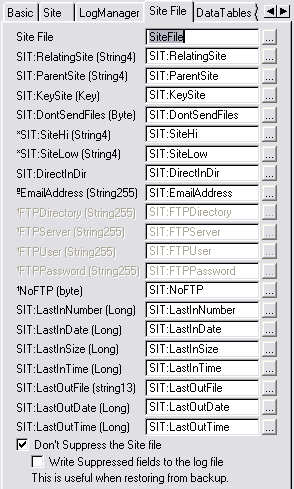

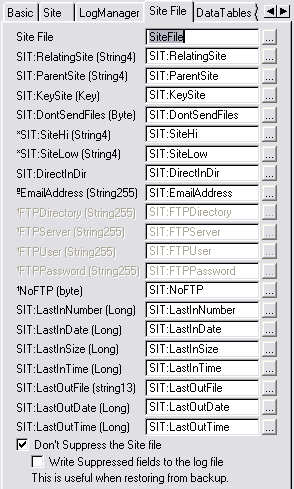

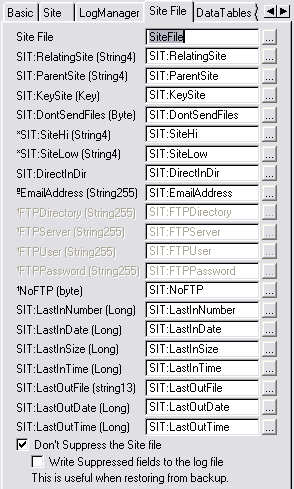

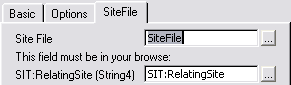

- On the Site Files tab, you need to enter the details of your Site file.

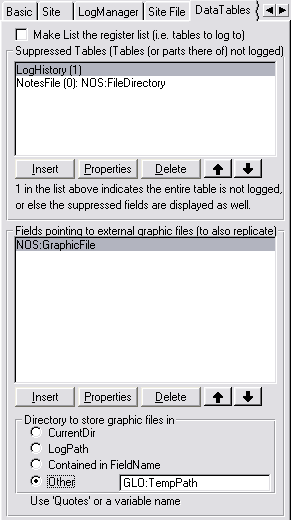

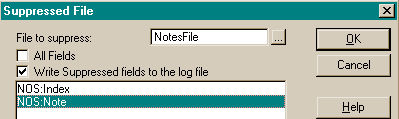

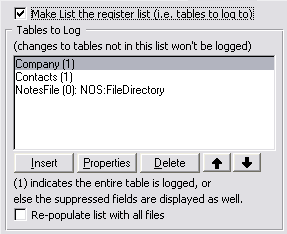

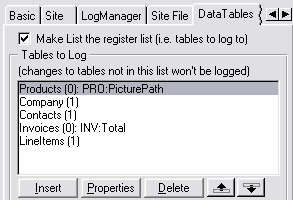

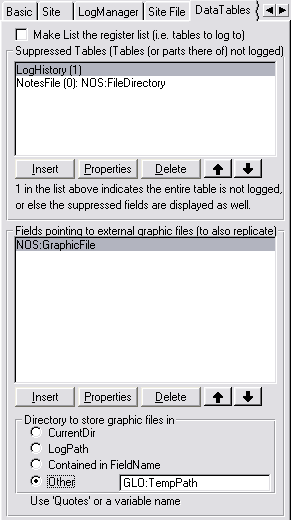

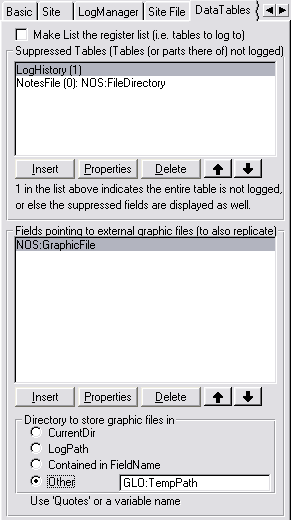

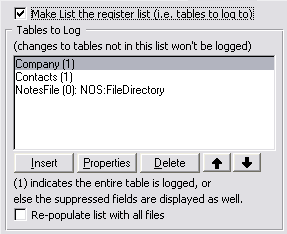

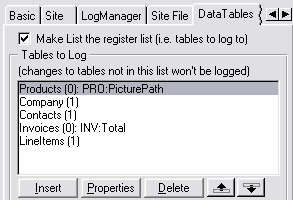

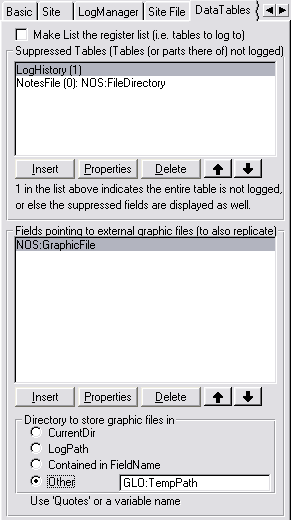

- On the DataTables tab, you can enter the files

in your dictionary that you do not want to be replicated.

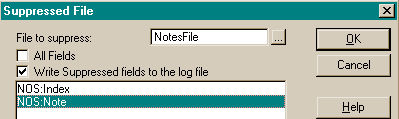

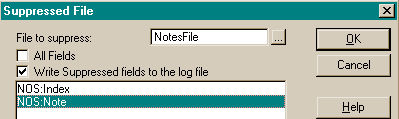

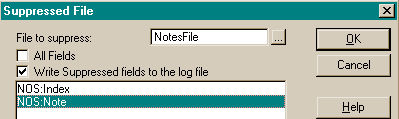

Click the Insert button. The Suppressed File window will appear.

Enter the name of the File to suppress.

You can use the file select button (...) provided.

If you only want to suppress certain fields in this file (and not

the whole file), then clear the All Fields

check box.

You can add suppressed fields to the list by clicking the Insertbutton.

Alternatively you can add the RepSuppress option in the user

options of each file in your dictionary. This means that you only

have to do this once (if you have many applications).

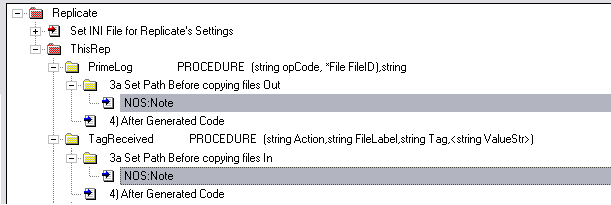

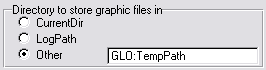

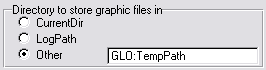

If you have fields pointing to external files (like a graphics file), then you can insert these fields into the second list, and the external files will be exported with the logfiles (after this field changes). If you don't have a central location for the external files, you can set the Directory to 'Other' and then put a variable in the field provided. You will find an Embed point (Global Embeds) in the PrimeLog method for each field pointing to an external file, where you can set the path that Replicate will copy the external file from.

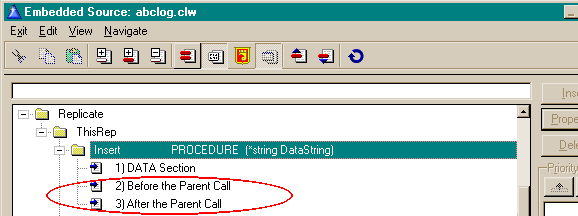

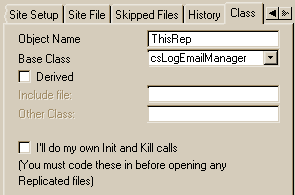

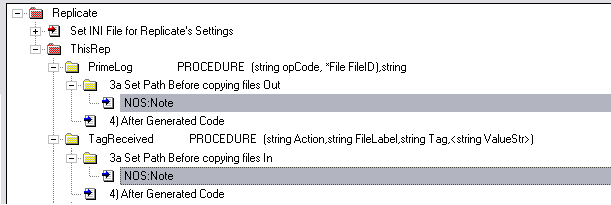

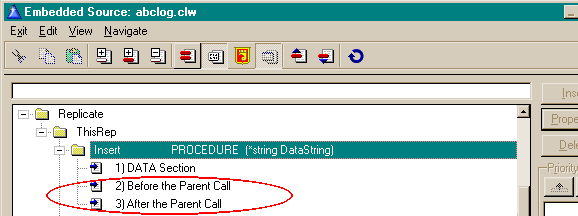

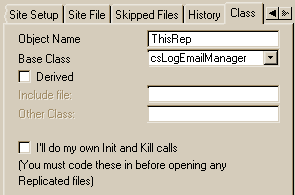

- On the Class tab, you can set the Object Name ('ThisRep' by default).

If you have derived your own Log class, then check the Derived checkbox and enter the required details (the Include file and the Other Class name). Leave the I'll do my own Init and Kill calls checkbox clear at this point.

Creating the LogManager program

Introducing the LogManager

Now that your application is logging the file changes, you need to create the LogManager to manage the importing and exporting of the log files and transport these log files to the different sites. The type of transportation manager will depend on the connection between the sites. Please read this section carefully, as it will save a lot of heartache in getting going with Replicate.

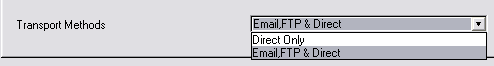

Generally there will be 3 different types of connections:

- The direct transportation system (where the logfiles are copied directly to the relating LogManagers)

- The email transportation system (where the logfiles are emailed to relating Sites)

- The FTP transportation system (where the logfiles are FTPed to a common FTP site)

You can also use your own transport mechanism.

You can program your LogManager to use all 3 transport mechanisms. In this case, you can set the mechanism for each site (depending on its setup and requirements) in the settings in the SiteFile. A site can also receive logfiles using all 3 transport mechanisms. In other words, if I have 4 sites (a head office, a branch, a roving salesman and a mirror-site for my backup), the head office can use FTP for the branch, email for the roving salesman and direct copy for the mirror-site.
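The head-office example above amounts to a per-site table of transport methods and destinations. The following Python sketch is a hypothetical illustration. The site identifiers, paths and addresses are made up, and none of this is Replicate's API. It groups the logfiles waiting to go out by transport, so that, for instance, a single FTP or mail session could serve every site that uses that method.

```python
from collections import defaultdict
from enum import Enum
from typing import Dict, List, Tuple


class Transport(Enum):
    DIRECT = "direct"  # copy into the relating site's incoming directory
    EMAIL = "email"    # mail to the relating site's dedicated mailbox
    FTP = "ftp"        # upload to the relating site's FTP directory


# Hypothetical settings held by the head office for its 3 relating sites.
HEAD_OFFICE_LINKS: Dict[str, Tuple[Transport, str]] = {
    "BR01": (Transport.FTP, "/replicate/br01/incoming"),
    "SM01": (Transport.EMAIL, "sm01-logs@example.com"),
    "MR01": (Transport.DIRECT, r"\\mirror\replicate\incoming"),
}


def group_by_transport(
    links: Dict[str, Tuple[Transport, str]],
    outgoing: Dict[str, List[str]],
) -> Dict[Transport, List[Tuple[str, str]]]:
    """Map each transport to the (destination, logfile) pairs it must deliver."""
    plan: Dict[Transport, List[Tuple[str, str]]] = defaultdict(list)
    for site_id, files in outgoing.items():
        transport, destination = links[site_id.upper()]
        for name in files:
            plan[transport].append((destination, name))
    return dict(plan)
```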

Let's look at each of these 3 in detail:

1. The direct transportation method requires that the LogManagers can copy the logfiles directly to each other's incoming directory. The incoming directory is a directory which is treated as an Inbox. The LogManager basically checks its InBox, processes any incoming logfiles that are found there and deletes them once they have been processed. The incoming directory is a sub-directory inside the LogPath.

Important: You will need to ensure that each site knows where the other site's incoming directory is (more about this later).

A couple of things to watch out for when using this method are:

- Make sure your drive mapping is correct and that you are pointing to the correct directory.

- Make sure that you have access rights to be able to copy the files to the incoming directories.

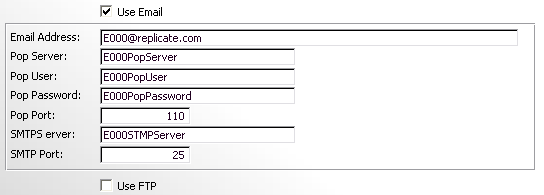

2. The Email transportation method requires that each site has a valid Email address. This Email address will be the incoming mailbox: the LogManager will retrieve mail from that inbox and extract the attached logfiles into the incoming directory (check out the Direct Transportation method for details), from where it will process the logfiles. Relating LogManagers must send files to this LogManager at this email address.

Important: You will need to ensure that each site knows the relating sites' email addresses (more about this later).

A couple of things to watch out for when using this method:

- You must use a dedicated mailbox.

- You'll need a mail solution (like NetTalk, which uses SMTP) and you'll need to ensure that your LogManager has the correct SMTP settings at each site.

3. The FTP transportation method requires that each site has access to the FTP server. There are a number of different ways of setting up the FTP transportation depending on your setup, so it's important to work out which setup you will require at the beginning:

FTP1. Will you have one FTP server that your sites will be using to store the data?

FTP2. Will all your sites be using FTP for transport (i.e. will none use Direct or Email)?

If you answered Yes to FTP1, then you have the simplest FTP setup. Multiple FTP servers require setting up a default set of FTP details (preferably the one you will use most) and then overriding the default for those sites that use a different FTP server.

Important: You will need to ensure that each site knows the relating sites' FTP directory (more about this later).

A couple of things to watch out for when using this method:

- The FTP server must be running all the time.

- You need to have the correct rights set up on the FTP server so that the logfiles can be written, copied and deleted from the respective folders. The LogManager also needs to be able to create its incoming directory (if it does not exist).
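The email and direct methods meet at the incoming directory. Attachments are extracted into it, and the inbox is then processed and emptied. The Python sketch below illustrates that flow with the standard `email` and `pathlib` modules. It is not how NetTalk or the Replicate classes do it, and the `handle` callback stands in for a hypothetical import step. Sorting the names processes each site's logfiles in counter order, because the hex counters are zero-padded.

```python
import email
from email import policy
from pathlib import Path
from typing import Callable, List


def extract_log_attachments(raw: bytes, incoming: Path, extension: str = ".log") -> List[Path]:
    """Save the logfile attachments of one raw email into the incoming directory."""
    msg = email.message_from_bytes(raw, policy=policy.default)
    saved: List[Path] = []
    for part in msg.iter_attachments():
        name = part.get_filename()
        if not name or not name.lower().endswith(extension.lower()):
            continue  # ignore anything that is not a logfile
        target = incoming / Path(name).name  # keep only the file name part
        target.write_bytes(part.get_payload(decode=True))
        saved.append(target)
    return saved


def process_inbox(incoming: Path, handle: Callable[[Path], None], extension: str = ".log") -> int:
    """Hand each waiting logfile to `handle`, then delete it once processed."""
    processed = 0
    for path in sorted(incoming.glob("*" + extension)):
        handle(path)  # if this raises, the file stays in the inbox for a retry
        path.unlink()
        processed += 1
    return processed
```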

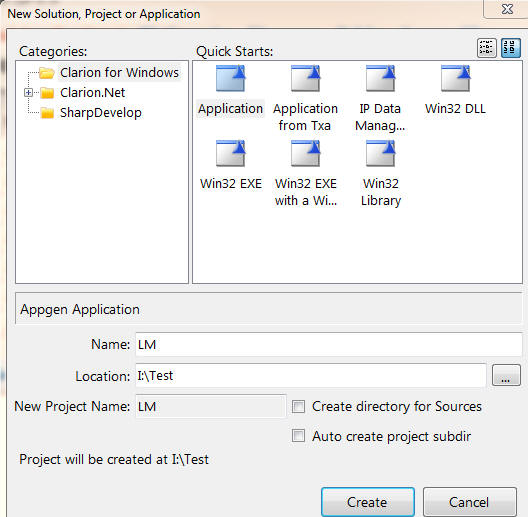

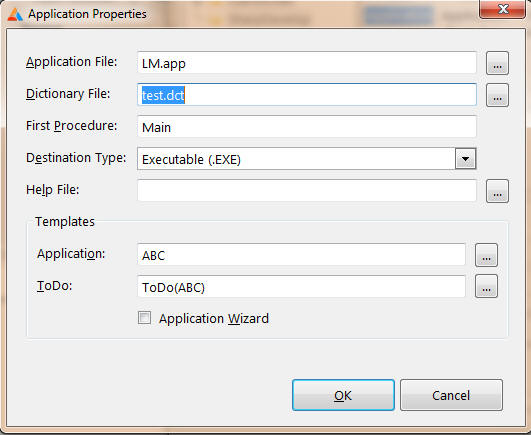

Creating the LogManager

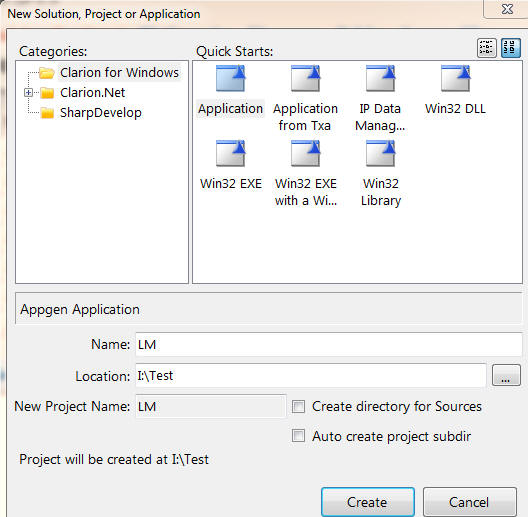

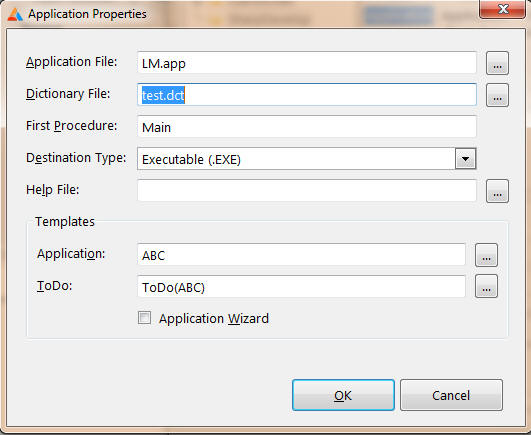

- Create a new, completely blank application (using your dictionary) based on the template set of your choice (ABC or legacy).

NB: uncheck the Application Wizard checkbox. Don't be tempted to create a LogManager-based application at this point, as it won't work.

Note: If your application is written in legacy, then it's a good idea to make a legacy application for the LogManager, or to do the conversion from legacy to ABC before implementing Replicate. Otherwise you may find that you are using non-compliant ABC file names, which will show up if you create an ABC LogManager.

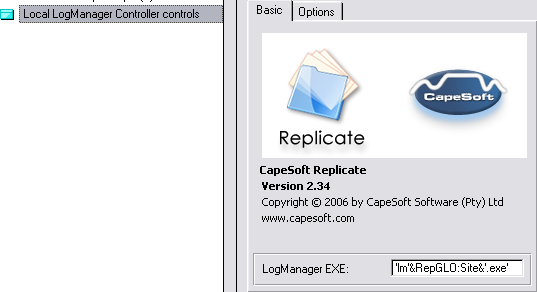

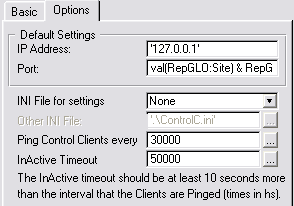

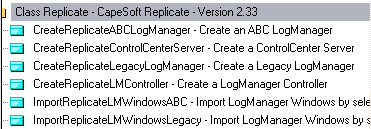

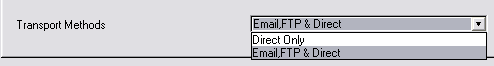

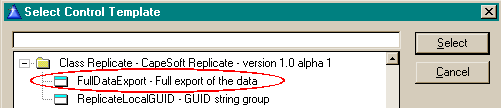

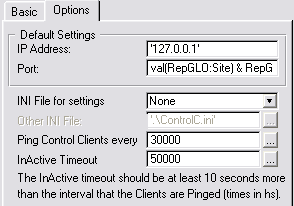

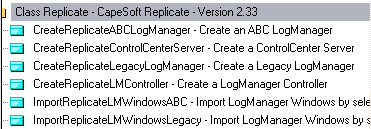

- Run one of the CreateReplicateLogManager Template Utilities (legacy or ABC, depending on which you chose) and select whether you want all transport methods, or Direct Only. You also need to decide whether you would like to implement the ControlCenterClient technology in your LogManager at this stage (check the Useful tips: Controlling the LogManager Externally for more details).

- You need to change some things on your Replicate Global Extension Template (Activate CapeSoft Replicate).

- Click the Global button.

- Click the Extensions button on the Global Properties window.

- Highlight the Activate CapeSoft Replicate template in the template list on the left.

- Change to the Site tab and enter the Records and the WorkStation details to correlate to those entered in your application.

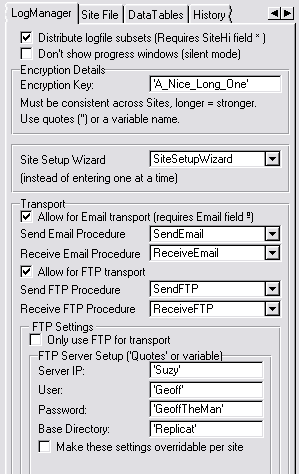

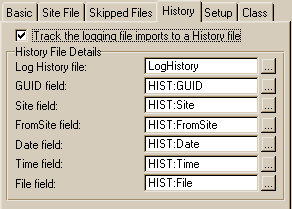

- Change to the LogManager tab and enter

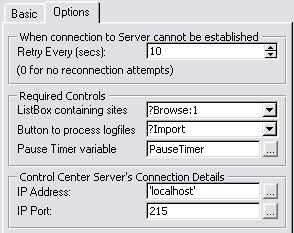

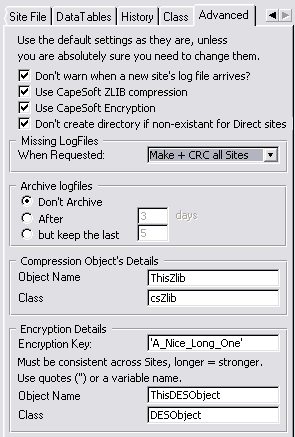

the LogManager Setup Options:

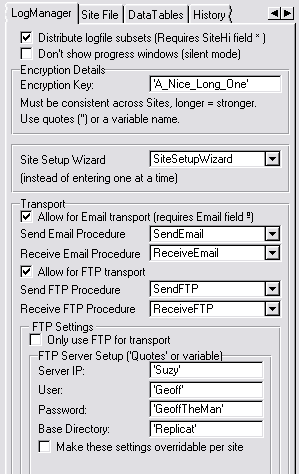

- If you would like to distribute subsets

of the logfiles, then you can check the Distribute

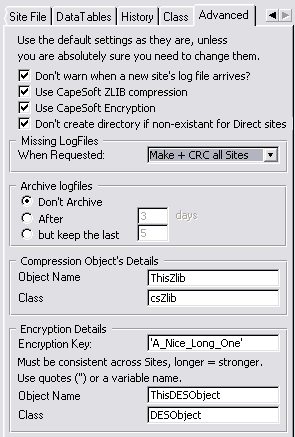

logfile subsets check box on the code template.

- Enter an Encryption Key; use a nice long one for strong encryption.

- If you are basing your LogManager on the csLogConnectionManager

class, then you will be able to select your transport method.

Check the relevant checkboxes (see the Global

Extension Template section for more details).

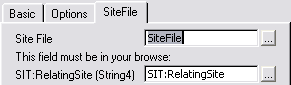

- Change to the Site File tab.

- Check that all the Site file's (file, fields and keys) details

are present and correct. If they are not there, then you don't

have a SiteFile in your dictionary (Check out

What you need to change in your Dictionary).

- Change to the DataTables tab and insert

the files as they were

entered in your application. Just do the suppressed files for

the moment, if you have external files to transport, do that later .

For more details on some of the other options, go to the

Global Extension Template section of this manual.

-

- At present the LogManager is setup to synchronize every 10

minutes, but you may require it to synchronize more or less

regularly.

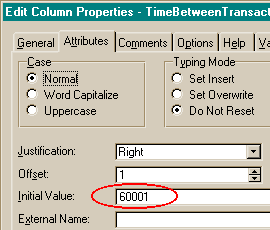

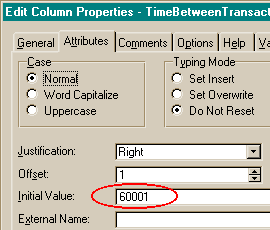

- Click the Global and then Data

buttons.

- Double-click the RepGlo:TimeBetweenTransactions

variable.

- In the Edit Column Attributes window

change to the Attributes tab.

- Change the Initial Value to the

amount of 100ths of a second that you require between logfile

processing.

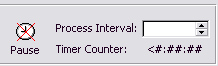

- You'll probably want to prevent users from disabling the Logfile processing. To do this, hide or disable the ?RepGLO:PauseTimer control on the ReplicationControlWindow procedure.

You may also want to prevent your users from altering the process interval at runtime. In this case, delete the ?RepGLO:TimeBetweenTransactions spin box (the Process Interval).

Note: You must delete these controls without deleting the Control Template!!

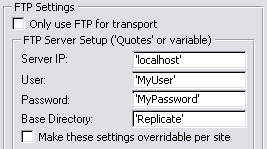

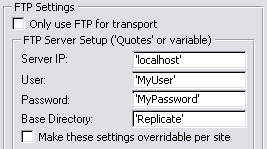

- You need to fill in the details for your FTP Server in the fields

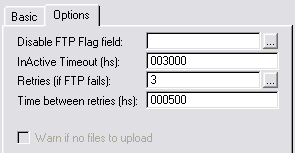

provided in the FTP Server Setup group. I

would suggest using constants. If you need to use variables, then set

it up initially with constants and get a test setup working, and you

can then move on to using variables. Constants must be encased in

single quotes.

- If all your sites will be using FTP, then you need to check the Only use FTP for transport checkbox.

- Go to the LogManager tab on your LogManager's Replicate Global

Extension template and clear the Make settings

overridable per site checkbox if you will be using only one

FTP site. Otherwise you can check this checkbox and you will be able

to override the sites that don't use the default FTPServer.

- If you checked the Make settings overridable per

site checkbox (i.e. you will be using more than one FTP

server) then you need to enter the SiteFile's field names that contain

the FTP override details in the SiteFile tab on your Replicate Global

Extension template.

BTW - because (when using FTP) there is a default setup, the LogManager

will assume that all your sites will use FTP. If they don't use FTP, then

check the NoFTP checkbox in the SiteFile to ensure that that particular

site doesn't attempt to connect to the FTP server.
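Two of the settings above lend themselves to a quick sanity check. The process interval is held in hundredths of a second. Each site's FTP details come from the default setup unless the site is flagged NoFTP or, with Make settings overridable per site checked, overrides them. This Python sketch is a hypothetical model of that precedence, not the code the template generates. The class and field names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional, TypeVar

T = TypeVar("T")

HUNDREDTHS_PER_MINUTE = 60 * 100  # RepGlo:TimeBetweenTransactions is in 1/100 s


def minutes_to_interval(minutes: float) -> int:
    """Convert a processing interval in minutes to hundredths of a second."""
    return round(minutes * HUNDREDTHS_PER_MINUTE)


@dataclass(frozen=True)
class FtpSettings:
    server: str
    port: int
    user: str


@dataclass(frozen=True)
class SiteFtpOverride:
    """Hypothetical per-site SiteFile values; None means 'use the default'."""
    no_ftp: bool = False
    server: Optional[str] = None
    port: Optional[int] = None
    user: Optional[str] = None


def _pick(override: Optional[T], default: T) -> T:
    return default if override is None else override


def effective_ftp(default: FtpSettings, site: SiteFtpOverride, overridable: bool) -> Optional[FtpSettings]:
    """Return the FTP settings a site should use, or None if it must not use FTP."""
    if site.no_ftp:
        return None  # NoFTP: never connect to the FTP server for this site
    if not overridable:
        return default  # one FTP server shared by every site
    return FtpSettings(
        server=_pick(site.server, default.server),
        port=_pick(site.port, default.port),
        user=_pick(site.user, default.user),
    )
```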

For more detail, check out the section Some more explanation on the built-in functionality of the default LogManager.

JumpStart tutorial to get 2 sites Replicating

First, a simple explanation of the Site File

The Site file basically contains all the information on how the sites relate to each other, or more accurately how a site relates to its relating sites. Each record in the site file represents a relationship with another site (there's an exception, but we'll get to that just now). Each record contains the last logfile received from that site (and its size if it was incomplete), the last logfile that was sent to that site, and where the LogManager will place the logfiles that are sent to that site (be it an FTP directory, an email address, or a directory to copy the files into directly).

NB: Each site will also have its own record in the SiteTable (where ThisSite = our site = RelatingSite). This is to store logfile counters and some other stuff. You must have this record or else Replication will not work.
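A rough model of the Site file helps when checking a setup. The Python sketch below is hypothetical. The field names only mirror the description above and are not the actual SiteFile labels, and the counters are plain integers. The sketch looks up the site's own record (where ThisSite = RelatingSite) and lists the records that describe real relationships.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SiteRecord:
    """Hypothetical shape of one Site file record."""
    this_site: str
    relating_site: str
    last_received: int = 0  # counter of the last logfile received from that site
    partial_size: int = 0   # bytes already received if that logfile was incomplete
    last_sent: int = 0      # counter of the last logfile sent to that site
    destination: str = ""   # FTP directory, email address or incoming directory


def own_record(records: List[SiteRecord], site_id: str) -> SiteRecord:
    """Find the record where ThisSite = RelatingSite; replication needs it."""
    site = site_id.upper()
    for record in records:
        if record.this_site.upper() == site and record.relating_site.upper() == site:
            return record
    raise LookupError(f"no Site file record with ThisSite = RelatingSite = {site}")


def relationships(records: List[SiteRecord], site_id: str) -> List[SiteRecord]:
    """Records describing this site's links to its relating sites."""
    site = site_id.upper()
    return [
        r for r in records
        if r.this_site.upper() == site and r.relating_site.upper() != site
    ]
```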

Examples

If you have C5.5F or earlier, then you MUST read the FAQ:Compiler Errors section.

There are a number of examples in your \Clarionx\3rdParty\Examples\Replicate directory. These demonstrate the use of the 3 different Replicate classes in both single-EXE and multi-DLL environments.

| SubFolder |

Application |

Based on Class |

Simple Explanation |

| demo |

demo.app |

csLog |

This typifies your single ABC application. It incorporates the

ControlCenterServer to start and stop the LogManager when it runs

(requires NetTalk). If you're using this app, then compile the

LM.app first (without running) - then compile and run this

application and the LM will run and close automatically with the

demo application. |

| demo |

demoNoNetTalk.app |

csLog |

This typifies your single ABC application, but without

the the ControlCenterServer to start and stop the LogManager when

it runs. |

| demo |

LM.app |

csLogConnectionManager |

This typifies the LogManager based on the ABC classes, using

NetTalk to handle FTP and Email transportation. Incorporates the

ControlCenterClient and WinEvent to minimize to the TaskTray

(requires WinEvent and NetTalk). |

| demo |

LMNoWE.app |

csLogConnectionManager |

Exactly the same as LM.app, but without the WinEvent

functionality. |

| demo |

LMdirect.app |

csLogManager |

This typifies the LogManager based on the ABC classes without

external transportation (limited to LANs). This app does not

contain NetTalk. |

| demo |

LMservice.app |

csLogConnectionManager |

This typifies the LogManager based on the ABC classes as a

service using SelfService. |

| demo |

SiteSetup.app |

csLog |

This is basically just a browse of the site file to be used with

the LMservice.app (so you can setup the outging details.) |

| MultiDLL |

root.app (DataDll)

Function.app (FunctionDLL)

Mainexe.app (the EXE)

LM.app (the LogManager) |

csLogManager |

This typifies your Multi-DLL ABC application, where the

LogManager uses the same data-DLL as the main exe. |

| ControlCenter |

lm.app

server.app |

csLogConnectionManager |

These 2 apps depict a typical scenario where all your

LogManagers are control centrally by one ControlCenter (the

server.app) |

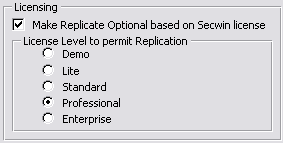

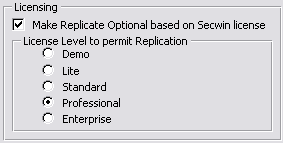

| Optional |

demo.app |

csLog |

This app depict a typical scenario where Replicate is activated

depending on the level of the Secwin licence (requires Secwin). |

demo.app and the MultiDLL apps (root.app, function.app and mainexe.app) typify your existing application(s). The LogManager programs (LM.app and LMdirect.app) are examples of the LogManager that you will need to create to manage the logfiles.

Let's have a look at 3 scenarios and what we would expect to see in

each scenario (in the SiteFile).

Note: These scenarios are a continuation

from one to the next. Please complete one through three, and don't jump

directly to the method of your choice, as this will lead to incoherent

results.

Scenario 1: 2 sites relating to each other using Direct transport (i.e. file copy) - with no site limitations

This scenario will work straight out of the box with no changes to the examples.

We have 2 sites: D000, which is the Primary Site, and D100, which is the Primary Site's mirrored site (so there is no site limit). The crucial thing is that D000 knows (and points correctly to) D100's incoming directory (and vice versa). The incoming directory is (by default) a sub-directory in the logpath of the other site. So if D100's logpath is:

D:\c55\3rdparty\Examples\Replicate\demo\D100\log

then its incoming directory will be:

D:\c55\3rdparty\Examples\Replicate\demo\D100\log\Incoming
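The default relationship between a logpath and its incoming directory is simple enough to express as a helper. For example, you might use it to pre-fill the Direct Incoming Directory for a relating site. This is a hypothetical sketch: `DefaultIncomingDir` is not part of Replicate. It only encodes the default described above, where the incoming directory is the `Incoming` sub-directory of the logpath.

```clarion
  PROGRAM

  MAP
DefaultIncomingDir  PROCEDURE(STRING pLogPath),STRING
  END

  CODE
  ! Shows D:\c55\3rdparty\Examples\Replicate\demo\D100\log\Incoming
  MESSAGE(DefaultIncomingDir('D:\c55\3rdparty\Examples\Replicate\demo\D100\log'))

! Hypothetical helper (not a Replicate method): by default a site's
! incoming directory is the 'Incoming' sub-directory of its logpath.
DefaultIncomingDir  PROCEDURE(STRING pLogPath)
PathLen  LONG
  CODE
  PathLen = LEN(CLIP(pLogPath))
  IF PathLen > 0 AND SUB(pLogPath, PathLen, 1) = '\'
    RETURN CLIP(pLogPath) & 'Incoming'     ! logpath already ends with a backslash
  END
  RETURN CLIP(pLogPath) & '\Incoming'
```

If you have changed the incoming directory at a site, don't rely on the default. Read the actual value from that site's About screen instead.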

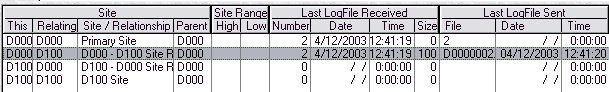

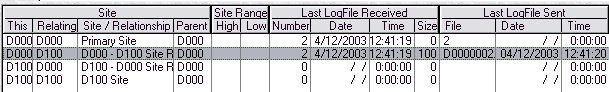

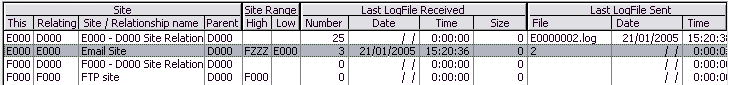

A snapshot of what the SiteFile should look like (in this case the Site file looks the same in both places - except for the Last LogFile counters - because there is no SiteRange limit on either site):

- The first record shows the Primary Site's details - the ParentSite,

the SiteRange, the currently used logfile (which is stored in the Last

LogFile Received Number) and the last logfile exported (which is a

number stored in the Last LogFile Sent File).

- The 3rd and 4th records are superfluous to the Primary Site - so we

won't concern ourselves with those at present.

- The record highlighted (the 2nd record where ThisSite = D000 and

RelatingSite = D100) shows the details that the Primary Site (D000)

will use to synchronize with D100 site. It shows:

- The Site Range (which would be used to

filter out the records not required for that site if there was a range

filter). In this case there is no filter as it is a mirror-site, so

this is set to blank.

- The Last LogFile Received counters, which indicate the last logfile that was received from D100 and was successfully imported into site D000 (more details).

- The Last LogFile Sent counters indicate

the file that was last sent to site D100 (and the time and date it was

sent).

- The Direct Incoming Directory field

indicates the directory into which logfiles from D000 must be placed

to be successfully imported by D100. This field must match the

Incoming Directory in the About screen at D100.

That's half the job done. We should be successfully replicating from D000

to D100. The next step is to get D100 replicating to D000, which is

basically a repeat of the above, except doing it at D100 with correct

details for relating to D000 (record 3 is the crucial record to get

correct at site D100).

How to get started with the examples (Tutorial 1):

For those who own NetTalk:

- In the examples, compile the logmanager LM.app in the

clarionx\3rdparty\examples\Replicate\demo directory to run the D000

site. Do not run the LogManager at this stage. If you don't own

WinEvent, then use the LMNoWE.app.

- Compile the demo.app.

- Run the Demo.exe application - the LogManager will start

automatically with the demo application.

- In the clarionx\3rdparty\examples\Replicate\demo\D100 directory, run demo.exe.

For those who do not own NetTalk:

- In the examples, compile and run the logmanager LMDirect.app in the

clarionx\3rdparty\examples\Replicate\demo directory to run the D000

site.

- Compile and run the demoNoNetTalk.app (your LogManager will not

automatically run and close down with your application).

- In the clarionx\3rdparty\examples\Replicate\demo\D100 directory, run lmdirect.exe and demo.exe.

You will now be able to see 2 sites replicating to each other directly

with both sites running on the same machine.

Scenario 2: 2 sites relating to each other using FTP transport - site limits

FTP can be both the simplest and the most complex transport method to set up, depending on the method you choose. If you are only going to use FTP for transport using a single FTP server, then you have almost no setup to do between 2 sites. The setup is all done in the template. In this example we'll set the LogManager tab (in the Replicate Global Extension Template) as follows:

Note: In the above scenario, you need to set up an FTP server locally, i.e. 'localhost' (if you're using XP/Vista, then this is quite easily done in IIS), with a User 'MyUser' and a password 'MyPassword'. You also need to create the base directory 'Replicate'. You don't need to worry about creating any other directories in your FTP setup - Replicate will do the rest. You can use the NetDemo example that ships with NetTalk to check that you can connect to your server and that your base directory has been created (or use Explorer to show the directories).

Continuing with site D000, which is the Primary Site (as discussed in Scenario 1 above), we introduce a new site F000, which is a child of the Primary Site with a site limit. Let's first look at what the setup will look like once everything is set up, and then we'll go through how to set it up.

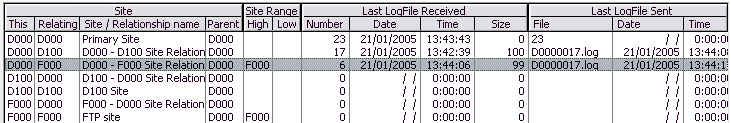

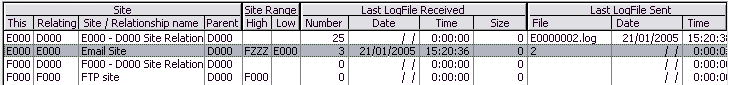

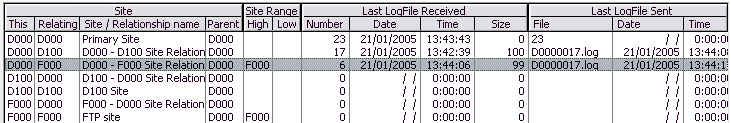

A snapshot of what the SiteFile will look like at the D000 site:

- The first record shows D000 Site's details - the ParentSite, the

SiteRange, the currently used logfile (which is stored in the Last

LogFile Received Number), the last logfile exported (which is a number

stored in the Last LogFile Sent File) and the FTP incoming directory.

- The 4th-7th records are superfluous to site D000 - so we won't concern ourselves with those at present (records 6 and 7 are the only 2 records in this case that will be visible at F000 - the others are filtered out because they are not in the same site range).

- The record highlighted (the 3rd record, where ThisSite = D000 and RelatingSite = F000) shows the details that Site D000 will use to synchronize with the F000 site. It shows:

- The Site Range (which would be used to

filter out the records not required for that site). In this case it

will only send site-related records with the F000 stamp (and filter

out everything else).

- The Last LogFile Received counters, which indicate the last logfile that was received from F000 and was successfully imported into our site (more details).

- The Last LogFile Sent counters indicate

the file that was last sent to site F000 (and the time and date it was

sent).

- The Direct Incoming Directory field and the Email Address fields are

clear - so that files are copied to the FTP Directory. If the Direct

Incoming Directory is not clear, then the LogManager will copy

directly and ignore the other (Email and FTP) settings for the

relating site.

Now that we know what the settings should look like, let's go through a quick tutorial on how to get there.

How to get started with the examples (Tutorial 2):

- In the examples, select the LM.app, edit the FTP Server setup in the

Replicate Global Extension template (if necessary). Fill in constants

for your server, user, password and base directory details (Note:

Replicate will create a sub-directory for each site in the base

directory in which to place logfiles for that site). Run it in your clarionx\3rdparty\Examples\Replicate\demo

directory to run the D000 site.

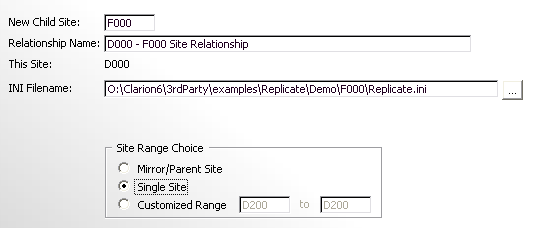

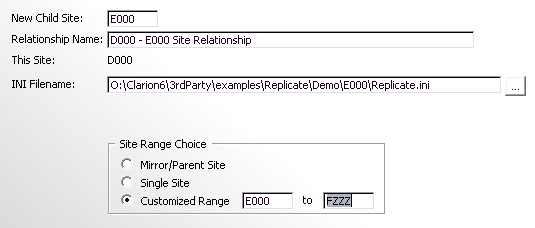

- To create the relating site, click the Create

Child button and a Wizard will appear enabling you to setup

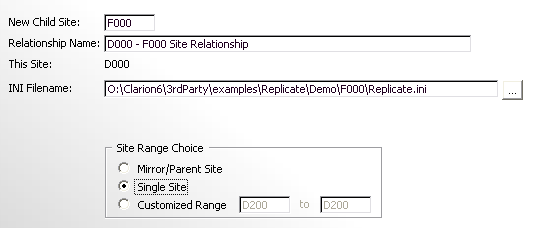

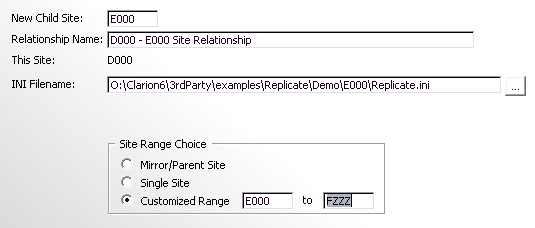

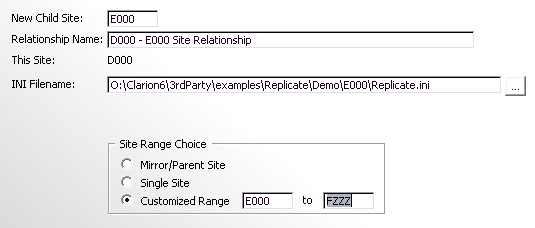

the relationship between the 2 sites. Enter the following:

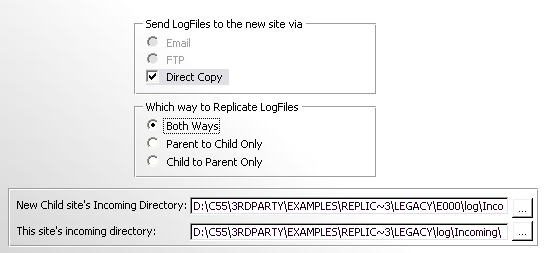

Click the Next button and check the Use FTP

checkbox.

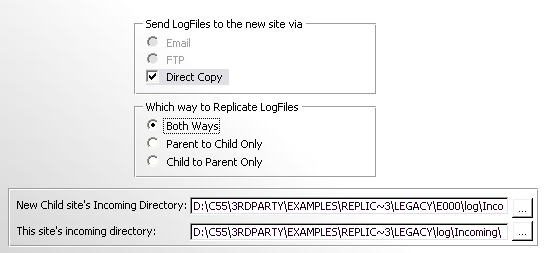

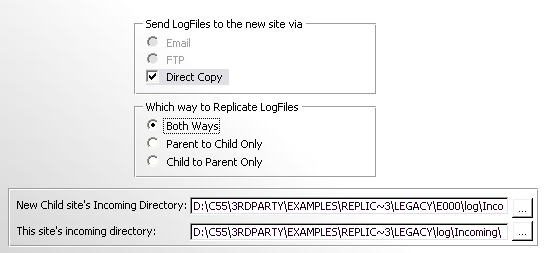

Click the Next button and choose the FTP

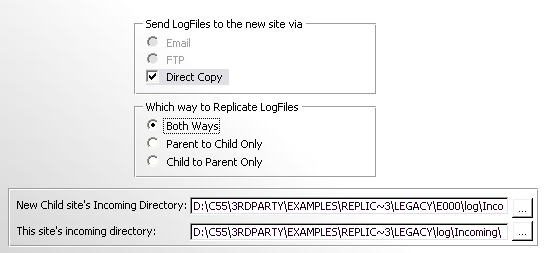

option in the Send LogFiles to the new site via

group. Leave the Direct Only checkbox

clear.

Click the Finish Button.

- Using NetDemo, check that the LogManager has created a directory

called F000 in your Replicate directory on your FTP server, and that

there are 2 files in there: D000data.z and D000msg.z.

- Run another instance of the logmanager in the clarionx\3rdparty\Examples\Replicate\demo\F000

directory to run the F000 site. Click the Process LogFiles

button - and the site will configure itself to the parent site

automatically.

Scenario 3: 2 sites relating to each other using a combination of Email and direct transport - a limited site with an individual backup

Continuing with our site setup, let's introduce a fourth site: E000. We'll concern ourselves with 2 sites: D000, which is the Primary Site, and E000, which is a child of the Primary Site with a site limit that includes its own data and the data from the FTP site in Scenario 2 (i.e. F000). We'll set this up so that it works like a roving laptop. It is sometimes connected to the network (so logfiles can be copied directly) and at other times connects remotely (via email). The crucial thing is that D000 knows the Email Address at which E000 will look for incoming logfiles, and also that the Incoming Directory is correct (see Scenario 1 for more details on the Direct method).

A snapshot of what the SiteFile should look like at the E000 site (which will filter out all the records for the D000 and D100 sites):

- The 2nd record shows E000 Site's details - the ParentSite, the

SiteRange, the currently used logfile (which is stored in the Last

LogFile Received Number), the last logfile exported (which is a number

stored in the Last LogFile Sent File), the Direct Incoming Directory

and the Email Address.

- The 3rd and 4th records are superfluous to site E000 - so we won't

concern ourselves with those at present.

- The first record where ThisSite = E000 and RelatingSite = D000 shows

the details that Site E000 will use to synchronise with D000 site. It

shows:

- The Site Range (which would be used to

filter out the records not required for that site).

- The Last LogFile Received counters, which indicate the last logfile that was received from D000 and was successfully imported into our site (more details).

- The Last LogFile Sent counters indicate

the file that was last sent to site D000 (and the time and date it was

sent).

- The Direct Incoming Directory field is set to point to the incoming directory of the other site. If the directory is present at the time of synchronisation, then the LogManager will copy directly; otherwise it will email the logfiles to the address specified. See the About screen (at D000) for the Incoming Directory to make sure this is correct.

- The Email Address field is set to match

the Email Address at D000 site. See the About screen (at D000) for the

Incoming mailbox to set this field to.
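The choice of transport described above can be modelled as a small decision procedure. It follows the documented priority (1. Direct Copy, 2. Email Address, 3. FTP Directory), the rule that direct copy is only used when the incoming directory is reachable, and the NoFTP checkbox. This is a hypothetical sketch, not Replicate's code. `PickTransport` is an invented name, and the `pDirReachable` flag stands in for however the LogManager actually tests whether the directory is present.

```clarion
  PROGRAM

  MAP
PickTransport  PROCEDURE(STRING pDirectInDir, BYTE pDirReachable, STRING pEmail, BYTE pNoFTP),STRING
  END

  CODE
  ! E000 (the roving laptop) is off the network, so it falls back to email
  MESSAGE(PickTransport('\\server\D000\log\Incoming', 0, 'd000@example.com', 0))

! Hypothetical model of the documented priority:
! 1. Direct copy (only if the incoming directory is reachable),
! 2. Email, 3. FTP (unless NoFTP is checked for that site).
PickTransport  PROCEDURE(STRING pDirectInDir, BYTE pDirReachable, STRING pEmail, BYTE pNoFTP)
  CODE
  IF pDirectInDir <> '' AND pDirReachable
    RETURN 'Direct'
  END
  IF pEmail <> ''
    RETURN 'Email'
  END
  IF NOT pNoFTP
    RETURN 'FTP'
  END
  RETURN 'None'
```

With the E000 settings above, the call shows `Email` while the laptop is away. It would return `Direct` once the incoming directory at D000 can be reached again.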

OK - now we know what we should be expecting, let's go through how to set

it up.

How to get started with the examples (Tutorial 3):

- In the examples, run the logmanager (LM.app) and in the clarionx\3rdparty\Examples\Replicate\demo

directory to run the D000 site.

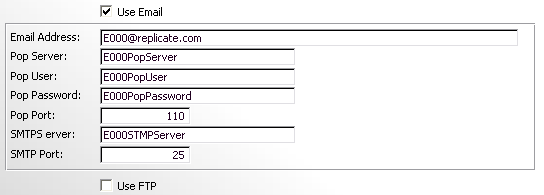

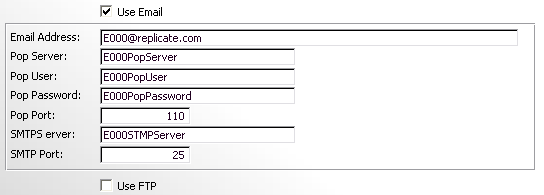

- Click the Change Settings item in the Program menu and click next a

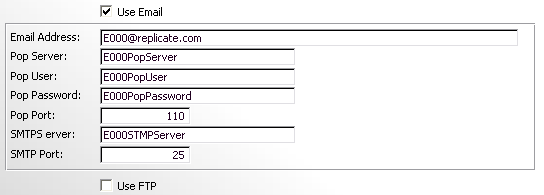

couple of times until you get to the Email Settings tab. Enter the

Email settings for this site, and click next and finish.

- To create the relating site, click the Create

Child button and aWizard will appear enabling you to setup

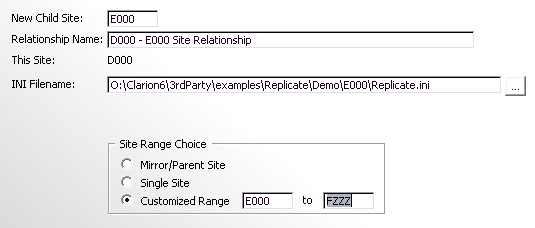

the relationship between the 2 sites. Enter the following:

Click the Next button and enter the following settings (you will need

to enter the relevant server and user details in the fields provided):

Click the Next button and enter the following settings:

By selecting both the Direct and the Email methods, we let the LogManager choose the best method, depending on whether a direct copy is possible or not.

Click the Finish Button.

- Run another instance of the logmanager in the clarionx\3rdparty\Examples\Replicate\demo\E000

directory to run the E000 site. Click the Process LogFiles button -

and the site will configure itself to the parent site automatically.

Some more explanation on the built-in functionality of the default LogManager

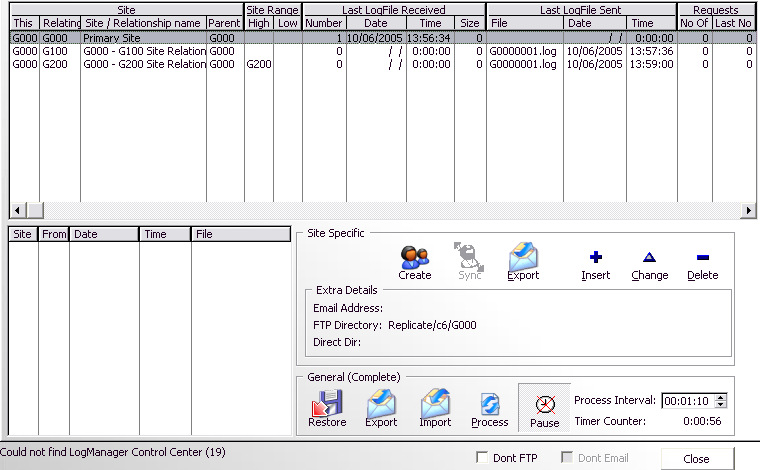

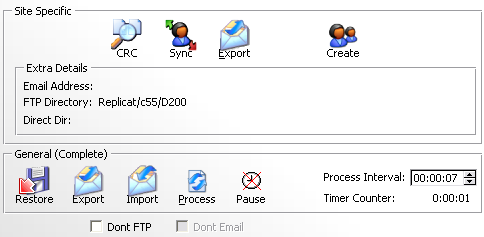

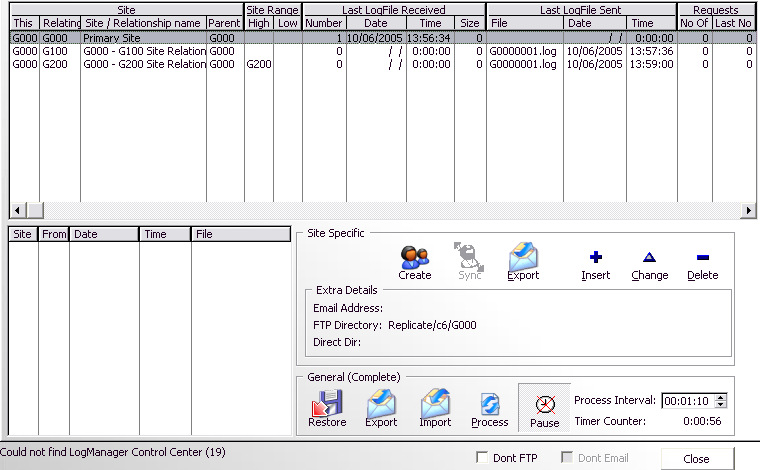

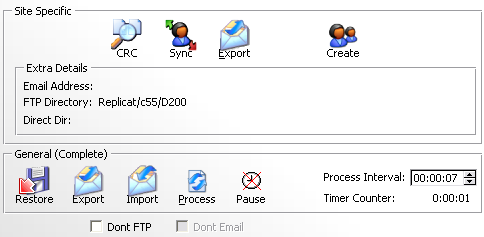

- The BrowseSites window (The Replication Control Window):

- The top Browse shows a list of sites. In this case, the SiteFile is not replicated, so only the records pertaining to this site (G000) will be displayed here. There are 3 records: the highlighted record shows the settings that this site uses for itself (FTP, LogFile to write to, Site Range, etc.), and the other 2 records determine how G000 relates to those other sites. Let's look at each column:

This(Site) - shows the records that pertain to this site. If you

are replicating your site file, then there will be other site

records in here that don't pertain to our site.

Relating(Site) - shows the relating site.

Parent(Site) - shows who the parent of that site is. In this case

this is the primary site, so all 3 sites will have this site as

the parent.

Site Range - this is the range of records to be sent from this site to the relating site (or the range of site-related data that this site will import). If both the High and Low Range fields are blank, then all site-related data is sent\received to\from that particular site. If the Low Range is blank, then the Relating Site value (i.e. the site ID of that site) is used as the low limiter.

Last LogFile Received - this group contains information showing

the last logfile received. The number is the last complete logfile

received from that site. If the Last logfile received was a

complete logfile, then the size will be 0. For example: if the

last logfile received was logfile No3, but it did not have an

EndOfFile stamp on it, then the Number will be 2 and the Size will

be the size of logfile No3 that was imported.

Last LogFile Sent - the details of the last logfile successfully

sent to a relating site.

Requests - if this site receives a logfile that is not the next logfile, it will request the missing\incomplete logfile from the relating site. This shows how many requests we've posted to that relating site, and which logfile was last requested.

- The bottom browse shows a history for the highlighted site of

files this site has imported from that particular site.

- The Site Specific group of controls - pertain to a single

highlighted site in the browse.

Create - creates a relating child site.

Sync - synchronize this site's data with a relating site's data (site ranges are applied to site-related data during the sync).

Export - export a set of data to the relating site (similar to a sync, but only one-way).

Extra Details - this shows the method(s) of transportation to that site. Email Address shows the address to which logfiles will be sent from this site (for the relating site to import). Similarly, FTP and direct dir apply to FTP transport and direct copy respectively. If there is more than one possible option for sending logfiles, then the following priority is used: 1. Direct Copy, 2. Email Address, 3. FTP Directory. This means that the transport mechanism is selected automatically, on the fly.

- The General (Complete) group of controls are commands that are

not site related.

Restore - is used to restore data from this site's logfiles.

Export - used to export a complete data set from this site to a

selected logfile.

Import - used to manually import a complete data set from a

selected logfile.

Process - issues a ProcessLogFiles, which receives and imports

incoming logfiles, as well as exports and sends outgoing logfiles.

Pause - allows the user to pause the timer (and thus disallow

Process to occur automatically).

Process Interval - allows you to set the interval between

Processes.

TimerCounter - shows the amount of time that will elapse before the next Process is automatically issued.

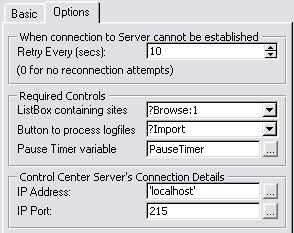

- If you are using the ControlCenterClient, then the string below the bottom browse indicates the status of the connection to the ControlCenterServer. If the client failed to connect to the server, then the number in brackets indicates the number of times the client has attempted to connect to the server.

- DontFTP\Dont Email - these temporarily disable FTP\Email globally at this site. This is useful if there is a temporary problem with one of these transport mechanisms: the LogManager will avoid using it until the checkbox is unchecked.

- The Create Child site window is based on the Replicate_CreateChild control template, which is explained in the Templates section of this doc.

- In the unlikely event of having to change a LogManager's settings,

you can select the Program | Change Settings window from the main

menu. This is similar to the Create Child Screens, although these

settings will pertain to our own site. You can set the SiteID, log

Path, Parent Site, as well as the Site Range Type (as discussed

above). You can also set the FTP and Email settings (if required). If

you make changes to these settings, you will need to exit and re-run

your LogManager in order for these changes to take effect. Be careful

when changing these settings as these will not be changed at relating

sites automatically. If you change any of these details, they must

also be modified at all the relating sites.
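The Site Range and Requests columns described above follow simple rules, which the sketch below models. It is a hypothetical illustration, not Replicate's code: `InSiteRange` and `MissingLogFile` are invented names. It assumes ranges are inclusive and that a blank High Range means "no upper limit", since the description above does not say either way.

```clarion
  PROGRAM

  MAP
InSiteRange     PROCEDURE(STRING pSite, STRING pLow, STRING pHigh, STRING pRelatingSite),BYTE
MissingLogFile  PROCEDURE(LONG pLastNumber, LONG pArrived),LONG
  END

  CODE
  ! Relating site F000 with range F000-F999: an F000 record qualifies (shows 1)
  MESSAGE('F000 in range: ' & InSiteRange('F000', 'F000', 'F999', 'F000'))
  ! Last complete logfile received was 2, but logfile 5 arrives (shows 3)
  MESSAGE('Request logfile: ' & MissingLogFile(2, 5))

! Hypothetical: does a record stamped pSite fall in the relating site's range?
InSiteRange  PROCEDURE(STRING pSite, STRING pLow, STRING pHigh, STRING pRelatingSite)
LowLimit  STRING(4)
  CODE
  IF pLow = '' AND pHigh = ''
    RETURN 1                          ! no range: all site-related data qualifies
  END
  IF pLow = ''
    LowLimit = pRelatingSite          ! blank Low Range -> the Relating Site ID
  ELSE
    LowLimit = pLow
  END
  IF pSite < LowLimit
    RETURN 0
  END
  IF pHigh <> '' AND pSite > pHigh    ! assumption: blank High = no upper limit
    RETURN 0
  END
  RETURN 1

! Hypothetical: which logfile to request when pArrived is not the next one.
! pLastNumber is the Last LogFile Received Number (last complete logfile).
MissingLogFile  PROCEDURE(LONG pLastNumber, LONG pArrived)
  CODE
  IF pArrived > pLastNumber + 1
    RETURN pLastNumber + 1            ! gap: request the first missing logfile
  END
  RETURN 0                            ! the next logfile (or an older one)
```

With the Last LogFile Received example above (Number = 2, non-zero Size), logfile 3 is the one still being completed. If logfile 5 turns up first, 3 is the first logfile to request.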

The Rules

These are the Rules. If you obey them, you'll be OK; if you break them, you're on your own.

If you're new to Replicate, then read the Help - I'm a new user, what must I read first? section of this doc first.

- Your Site fields MUST be STRING(4).

- Each table in your dictionary that is replicated MUST have a GUID

STRING(16) field. Do not touch this field - this is a field for

Replicate to use. DO NOT CHANGE THIS FIELD!!

- You MUST have a site table in your dictionary.

- At each site, your Site table MUST have a record for each site that

it relates to as well as a record for

itself. These records all have ThisSite = OurSite. Note you may have

more records replicated from other sites in your Site table - but

these are not used by ThisSite.

- You MUST run one and only one LogManager per site.

- You cannot have one table declaration for multiple files (i.e.

switch filenames on the fly).

- Do not delete (or drop) your site table at an existing site when

upgrading your application/LogManager.

- DO NOT be tempted to skim read the docs

and rush into implementing it into your application!!!

- Read the Some things NOT to

do in the Useful Tips section.
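The first two Rules describe how a replicated table must be declared. The declaration below is a hypothetical sketch: the Orders label, the ORD prefix, the TOPSPEED driver and the OrderDate field are placeholders, and calling the site field `Site` is an assumption. The GUID field follows the `<PRE>:GUID` naming that FM3 relies on.

```clarion
! Hypothetical replicated table showing only what the Rules require;
! the label, prefix, driver and OrderDate field are placeholders.
Orders          FILE,DRIVER('TOPSPEED'),PRE(ORD),CREATE
Record            RECORD,PRE()
GUID                STRING(16)     ! ORD:GUID - reserved for Replicate, never change it
Site                STRING(4)      ! ORD:Site - site field, must be STRING(4)
OrderDate           LONG           ! ...your own fields follow...
                  END
                END
```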

Useful Tips

(This is a really handy

section, full of useful bits of info - highly suggested reading)

- General Replicate Tips

- Things to do in/with the LogManager

- Some useful tips for your application:

Note: You must re-compile your LogManager when

making changes to your dictionary.

1. General Replicate Tips

Some things NOT to do

- DO NOT make file changes to

your files other than through your program, or another Replicate

enabled program. This means that you cannot do file maintenance via

the dictionary browser and other manual editing tools.

- DO NOT copy a client's data onto your laptop, make changes, and copy the changed database back over the existing data. You must either:

- make your laptop a client site and import the changes as you

would in the normal site import manner.

- OR -

- export the client's data and import it into your laptop, make changes, and then import the logfile of the changes you have made into the client's database.

- DO NOT make your Outgoing directory the same as the Incoming

Directory (SIT:DirectInDir) of another site.

- For Clarion6 and above you must check the Open

In Current Thread option for each file in the dictionary that

you want to be replicated.

- DO NOT make your log directory the same as the Outgoing Directory or

the Incoming directory.

- Do not use PUT(View) in Clarion5.5. This won't get logged (a

Clarion5.5 limitation). The Process template uses PUT(View). Also do

not use DELETE(View) as this will also not get logged (this may be

fixed by SV at some stage but at the time of writing this is still not

a possibility).

Recommended: Use a different hard drive for the logfiles. Your logfiles provide an easily restorable backup, so if the hard drive where the data is stored goes faulty, it will be a simple matter of restoring from the backup. If this is not possible, then you can create a dummy mirror-site. This means that your logfiles will be copied to another location, where they can be stored until a backup is required.

What you need to distribute to your users:

- Your csLog enabled applications (and everything that you would

normally distribute that goes with these applications)

- Your csLogManager application.

- The zlib.dll (found in your Clarionx\3rdparty\bin directory) - which

should be installed into the same directory as the LogManager.

- Any pre-set site information contained in the INI settings file (set

in your Global Extension

template) and (depending on settings) the SiteFile. This could

include the Site, logpath, FTP settings, site range, etc.

- If you are using NetTalk as a transport engine, then you need to

distribute the NetTalk dll (check the NetTalk docs for more details).

Replicate and SQL

Replicate supports SQL with a few limitations:

- You cannot use prop:SQL to perform write operations on the database,

as these will not be visible to the Replicate methods (with the

exception of using Triggers and StoredProcedures - with the caveat

mentioned below)

- You cannot use the SQL engine to do relational integrity

maintenance, as these are also not in view of the Replicate methods

(unless you are only replicating between SQL databases of the same

database type that have the same RI rules in place). For example: when

the relationships in the dictionary are used to maintain the relational integrity, the updates/deletes are all done through the file driver engine (one transaction at a time). Each of these transactions is logged, and when an import is done, the relational integrity is maintained.

- There was a bug in the Clarion MSSQL driver (fixed in 9057) where the GET did not pass through the CALLBACK. This meant that there was no way to determine what changed in a file record when the next PUT was done, so the entire contents of the record needed to be stored. That makes for larger logfiles, and also means record-level replication as opposed to field-level replication. If you cannot upgrade to 9057 or later, then you need to be aware of this deficiency.

NOTE: If you are using 9057 or up, then you need to check the 'I'm using Clarion6 9057 or higher' checkbox on the Options tab of the Global extension template in both your application and the LogManager.

- You cannot use triggers (unless your triggers and stored procedures are precisely the same in both databases), as the file activity is not visible in the filecallback, so these changes cannot be logged by Replicate.
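To make the first limitation concrete, the fragment below contrasts a write that Replicate cannot see with an equivalent one that it can. It is a sketch only: it assumes a hypothetical SQL-backed Orders table (prefix ORD) with a Status field in an ABC application. It is meant for a procedure's code section and is not a complete program.

```clarion
! NOT logged: the UPDATE runs inside the SQL server and never passes
! through the file driver callback, so Replicate never sees it.
  Orders{PROP:SQL} = 'UPDATE Orders SET Status = 1 WHERE Status = 0'

! Logged: each record is written through the file driver (ABC Update),
! so every change is seen, logged and replicated individually.
  SET(Orders)
  LOOP UNTIL Access:Orders.Next()
    IF ORD:Status = 0
      ORD:Status = 1
      Access:Orders.Update()
    END
  END
```

The record-by-record loop is slower, but it is the form whose changes reach the other sites.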

Implementing Translation

There are only a few things that need to be translated in Replicate: the error and warning messages, and a few internal windows (like the importing and exporting progress windows - although these are generally hidden). Translation is supported by means of an INI-type file that you can edit using a text editor like NotePad. For example:

[RepMessage]

Heading=Replicate Warning

Unknown Message.=Unknown Message.

Now|Never|Next Time=Now|Never|Next Time

[ReplicateWindow]

Importing...=Importing...

Exporting...=Exporting...

Processing...=Processing...

There are 2 sections: The RepMessage section, which contains all the

translations required for the Messages associated with Replicate; and the

ReplicateWindow section, which contains all the translations required for

the windows associated with Replicate.
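In this file format, each entry name is the original English text and each value is its translation. The sketch below shows how such a file can be read with Clarion's GETINI. It is a hypothetical illustration of the format, not how Replicate loads the file internally. `RepTranslate` and the file path are invented.

```clarion
  PROGRAM

  MAP
RepTranslate  PROCEDURE(STRING pSection, STRING pText, STRING pIniFile),STRING
  END

  CODE
  ! With the sample file above saved at this (hypothetical) path,
  ! this shows the translation of 'Importing...'
  MESSAGE(RepTranslate('ReplicateWindow', 'Importing...', 'C:\MyApp\RepTranslate.ini'))

! Hypothetical lookup: the entry name is the original text. GETINI returns
! the default (the original text) when the file, section or entry is missing.
RepTranslate  PROCEDURE(STRING pSection, STRING pText, STRING pIniFile)
  CODE
  RETURN GETINI(pSection, CLIP(pText), CLIP(pText), CLIP(pIniFile))
```

Falling back to the original text means an incomplete translation file still produces readable (English) messages.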

There's a method called

GenerateTranslationFile,

which you can use to generate (or add to) a translation file with all the

messages and other text that requires translation. To use this translation

file, you can set the translation file in the

Global Extension Template.

Complete replication of Site related files throughout the Site-Tree

There may be cases where you require a Site-related file to be visible

throughout all the sites.

For example: you have several pizza parlour branches using software to track the orders of clients. You want all the branches to be able to add their own clients, but also to view the other branches' clients. The clients may not always go to the same branch, and you want whichever branch they visit to be able to view the client's details (maybe the client file has a favourite pizza field). You still want subset replication for your invoices and orders files.

In the above scenario, you simply change the Site field name to something

slightly different (e.g. SiteID). Replicate will see this as a global

table and will replicate the file changes throughout the Site tree, but

you can still impose site limitations on the way the data is viewed.

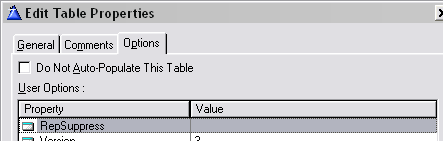

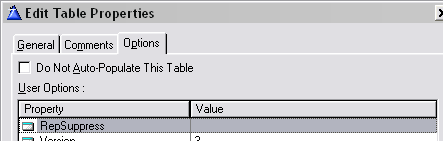

Suppressing files in the dictionary (as opposed to in each app)

Instead of filling out the list of suppressed tables in your application,

you can add a user option 'RepSuppress' in your dictionary for those files

that you wish to suppress.

- Open your dictionary, and right click on a file that you want to

suppress and click the Properties item in

the popup that appears.

- Change to the Options tab in the Edit

Table Properties window that appears.

- Enter the RepSuppress option in the User Options table as follows.

You can do this for each table that you require to suppress.

Warning: You should either use the template

list (to suppress files) or the dictionary option - not both, as this can

lead to confusion.

What to do in a Multi-DLL setup

Case 1 (the usual case):

You don't need any of the Replicate properties or methods in any of the applications. Your LogManager will use the DataDLL.

Solution:

- Add the Replicate Global Extension into your Global DataDLL - and

setup the options as you would your LogManager for a single EXE, but

check the This is part of a Multi-DLL application,

Export Class from this DLL and Use

this DataDLL for the LogManager checkboxes.

- In your LogManager, add the Replicate Global Extension and check the

This is part of a Multi-Dll application

checkbox and go to the Class tab and select the same class that you

did in the DataDLL (in step 1).

You don't need to add the Replicate templates to any of your other

applications.

Case 2:

You need some of the Replicate properties or methods in some of your applications. Your LogManager will use the DataDLL.

Perform the steps as in Case 1, but add the Replicate Global Extension

template to those DLL (and EXE) applications where you require the use of

the Replicate properties and/or methods. In each of these instances, check

the This is part of a Multi-DLL application and select the class that you

selected in the DataDLL.

Case 3:

Your LogManager is completely separate from your Multi-DLL application (stand-alone, or with its own DLL set).

Solution:

- Add the Replicate Global Extension into your DataDLL and setup the

options as you would for your log enabled application. Check the

following checkboxes: This is part of a Multi-DLL

application and Export Class from this

DLL but leave the Use this DataDLL for

the LogManager checkbox unchecked.

- Setup your LogManager as laid out in the docs above for a StandAlone

LogManager.

Converting an existing application with independent sites to a Replication setup

- The first step is to add FM3 and ship your application.

- The next step is to clean up your dictionary. You need to try to get rid of Autonumbered keys that shouldn't be there. Let's take the Bizrules app (shipped with Clarion6), which has your standard customers, orders, lines, items (or products) (and rules) type database. Here we have all 3 table types: Orders and Lines (site related tables), Items and Rules (global autonumbered tables) and Customers (a global non-autonumbered table).

- The first important step is to make the customers table a

non-autonumbered table. This is because we want all our customers

to be at all sites - and we don't want to worry about duplicates

(i.e. the same customer with a different number at each site).

This means we have to change a number of things in our database:

- The CUS:SysID has to be made obsolete from the table (also the

Customer Number field) (don't delete it).

- We need to make an alternative primary key (let's use Company\Firstname\Lastname - although in normal everyday life this would not be sufficient to ensure uniqueness, as there may be 2 people with the same names working at the same company). Otherwise you could use the GUID field.

- We need to add the Company\FirstName\LastName (or if you've used

the GUID field) relating fields to the Orders table. This is where

things start getting exciting as you need to write a routine to

make sure that on conversion the correct data gets populated into

the new field. We won't delete the CUS:SysID in these tables -

just superannuate it. Once we've added our new relating field(s)

we can run our routine and populate these new fields based on the

values picked up through the old CUS:SysID relationship.

This routine will look something like:

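  SET(Orders)                                  ! walk the Orders table in file order
  LOOP UNTIL Access:Orders.Next()              ! Next() returns non-zero at the end of the file
    CUS:SysID = ORD:SysID                      ! old numeric link to the customer
    IF Access:Customers.Fetch(CUS:SysIDKey) = Level:Benign
      ORD:CusGUID = CUS:GUID                   ! populate the new GUID-based link
      Access:Orders.Update()                   ! save the Orders record (not Customers)
    END
  END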

- Once we've cleaned up the table structures, we can move on to changing the dictionary to a Replicate-enabled dictionary. Use BDE to add a GUID field to each table, and Site fields to those tables that require them (see FAQ 2.3 for more details).

- FM3 will auto-populate your GUID fields, but they must be called <PRE>:GUID for FM3 to recognize them as GUIDs (where <PRE> is the file's prefix). Site fields should have the SetIfNew option set to $$$$. It's a good idea to distribute your application at this stage, so that the Site fields and GUIDs are all created before Replicate initializes. Otherwise you need a separate application that runs FM3 on all the tables to convert the data (and it must be run before your application runs). You can easily build this with the Conversion Application template utility included in FM3.

- OK, now that our data is all set up and Replicate-ready, you're ready to add Replicate to your application.

- Add the following line of code to the end of the Replicate Init method ("After Generated Code"). For more detail on how to do this, check out the Deriving your own methods section.

self.PrimeSiteField('$$$$',Rep_CheckIfDone)

This changes every site field value that was primed with $$$$ to the current site's value (this is only done once).

- Alright, that's about it. Well, not quite, because here's the killer. You probably have two complete sets of independent data: a products table, a customers table, etc. at each site. When they start replicating, a whole bunch of duplicates will spring up. The best-case scenario is that you can designate one of these sites as the master, whose database will supersede that of the other, but more likely you have valuable data at both sites, neither of which you'll want to throw away. In this case there's no shortcut: add a site field to each table, replicate the data (thus merging it), and then create a program (with Replicate in it, or a routine in your application) that merges/deletes duplicates. It will need to be run manually by someone who understands the data. Once the merge has taken place, the site field must be removed from the tables that don't require it.
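Deciding which duplicate survives needs a person, but finding the candidates can be automated. The sketch below is a plain Python model of that detection step, not Replicate or Clarion code. It assumes each merged customer record still carries the temporary Site field plus Company, FirstName and LastName (the field names and the case/whitespace normalisation are assumptions), and it reports only natural-key matches that came from more than one site.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical shape of a merged customer record: field name -> value,
# including the temporary Site field that says where the record came from.
Customer = Dict[str, str]
Key = Tuple[str, ...]

NATURAL_KEY_FIELDS = ("Company", "FirstName", "LastName")


def natural_key(rec: Customer) -> Key:
    """Normalise the natural key so differences in case or stray
    whitespace don't hide a duplicate."""
    return tuple(rec[f].strip().lower() for f in NATURAL_KEY_FIELDS)


def find_duplicates(records: Iterable[Customer]) -> List[List[Customer]]:
    """Return groups of records that share a natural key and originate
    from more than one site: candidates for a manual merge/delete."""
    groups: Dict[Key, List[Customer]] = defaultdict(list)
    for rec in records:
        groups[natural_key(rec)].append(rec)
    return [recs for recs in groups.values()
            if len({r["Site"] for r in recs}) > 1]


if __name__ == "__main__":
    rows = [
        {"Company": "Acme", "FirstName": "Ann", "LastName": "Lee", "Site": "A"},
        {"Company": "ACME ", "FirstName": "ann", "LastName": "Lee", "Site": "B"},
        {"Company": "Zed", "FirstName": "Bob", "LastName": "Roe", "Site": "A"},
    ]
    for group in find_duplicates(rows):
        print([r["Site"] for r in group])  # prints ['A', 'B']
```

Grouping on the GUID would not help here: records that were created independently at each site have different GUIDs, so only the natural key can reveal that they describe the same customer.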

Optional:

- If each client has its own SiteTree (i.e. maybe with 2 sites), then it may be easiest to include an INI file (with the SiteID in it) in the install. The initial site can then start up with all its details intact.

- The client can then create their own extra child sites using the CreateChildSite wizard in the LogManager (which you can tailor to suit your needs, e.g. making certain fields read-only). Ideally you'd have an FTP server that they can access, so that you can programmatically set Replicate to use it; otherwise they'll need to set up the local connection between the two sites (i.e. the incoming directory).

Note on point 2 (if you're using the common FTP server): You need to make sure that each INI file you ship has its own unique SiteID, otherwise you may end up with 2 sites sharing the same SiteID.
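One way to guarantee this is to generate all the shipped INI files in a single build step that refuses duplicate SiteIDs. The Python sketch below assumes a [Replicate] section with a SiteID key and a site_<SiteID>.ini file name; those names are hypothetical placeholders, so substitute whatever your LogManager actually reads.

```python
import configparser
import os
from typing import Iterable, List


def write_site_inis(out_dir: str, site_ids: Iterable[str]) -> List[str]:
    """Write one INI file per install, each with its own SiteID.

    Section/key names ([Replicate] / SiteID) and the file-name pattern
    are hypothetical placeholders, not Replicate's real INI layout.
    """
    ids = list(site_ids)
    if len(ids) != len(set(ids)):
        # Two shipped installs sharing a SiteID is exactly what to avoid.
        raise ValueError("SiteIDs must be unique across shipped INI files")
    paths = []
    for site_id in ids:
        cfg = configparser.ConfigParser()
        cfg.optionxform = str  # keep the key's case ("SiteID", not "siteid")
        cfg["Replicate"] = {"SiteID": site_id}
        path = os.path.join(out_dir, f"site_{site_id}.ini")
        with open(path, "w") as fh:
            cfg.write(fh)
        paths.append(path)
    return paths
```

Checking uniqueness before writing anything means a bad ID list produces no files at all, rather than a partial batch.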

Note on point 1 (if you're using the common FTP server): Alternatively, you could randomly generate the SiteID and then contact the FTP server (doing a directory search) to see whether that SiteID is available, and assign it dynamically before Replicate initializes (or by running a separate install exe on startup).
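A minimal sketch of the generate-then-check loop, in Python for illustration only. The is_taken callback stands in for the FTP directory search (how SiteIDs map to directories on your server is an assumption), and the 4-character uppercase/digit format is chosen just for the example. A plain check-then-assign sequence is racy: two sites starting at the same moment could both see an ID as free, so in practice the check should also reserve the ID (for instance by creating its directory on the server).

```python
import random
import string
from typing import Callable, Optional


def pick_site_id(is_taken: Callable[[str], bool],
                 rng: Optional[random.Random] = None,
                 length: int = 4, max_tries: int = 100) -> str:
    """Generate random SiteIDs until one is reported as not in use.

    is_taken is a placeholder for the FTP directory search; the ID
    alphabet and length are illustrative assumptions.
    """
    rng = rng or random.Random()
    alphabet = string.ascii_uppercase + string.digits
    for _ in range(max_tries):
        candidate = "".join(rng.choice(alphabet) for _ in range(length))
        if not is_taken(candidate):
            return candidate
    raise RuntimeError("no free SiteID found; consider a longer ID")


if __name__ == "__main__":
    existing = {"AB12", "ZZ99"}  # e.g. SiteID directories found on the server
    print(pick_site_id(lambda sid: sid in existing))
```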

Things to do in/with the LogManager

Restoring a complete database

When doing a complete data restore, you will need to run your LogManager with the following switch:

/NoAutoSiteAdd

This ensures that when you first run the program, your own site is not automatically added, so that when you do the restore, your own site's record will also be restored. If you do not do this, a site record will be added automatically, and you will be unable to restore the original site info to the new record, because a different GUID exists as the record identifier.

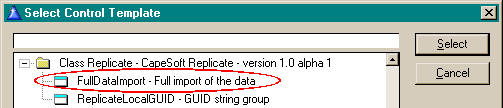

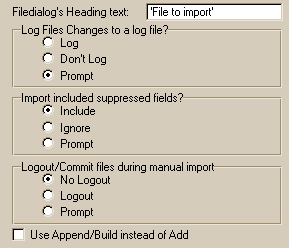

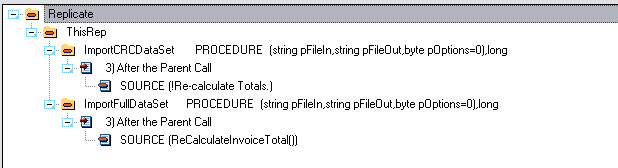

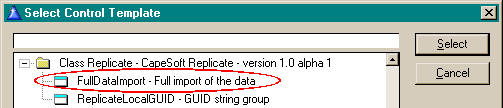

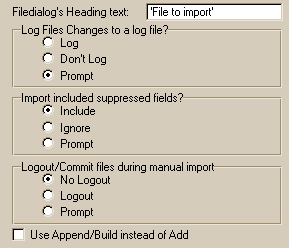

If you're using the FullDataImport Control Template to handle the import, then before importing you will be prompted with the following messages:

- Would you like to import suppressed fields as well?

If you have suppressed some fields in the tables that you are logging, you can choose to import these suppressed fields (provided that the suppressed fields have been written into the log file). For example, the LogManager tracks which log files (and their sizes) it has imported from and exported to its relating sites. These tracking fields are normally suppressed. However, when you do a complete import, it might be necessary to restore these pointers to continue with smooth replication (as though a complete re-import never occurred).

- Would you like to log changes implemented?

You may want to log the file changes that are made during an import (as though these were normal changes) so that you can distribute them to your relating sites. Normally you would select No, as this is simply used to get a site's data back to where it was before the wheels fell off.

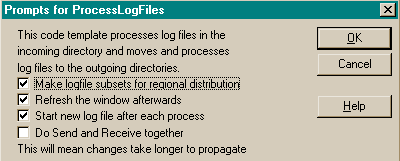

Making LogFiles more frequently than daily

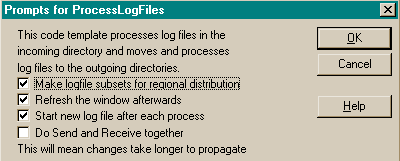

It is possible to make LogFiles more often than daily in Replicate. The most practical way to get more logfiles is to create a new logfile at every transaction. You only need to modify the LogManager program; however, your own programs (the log programs) MUST be compiled with Beta 9 or later, or else replication will not work.

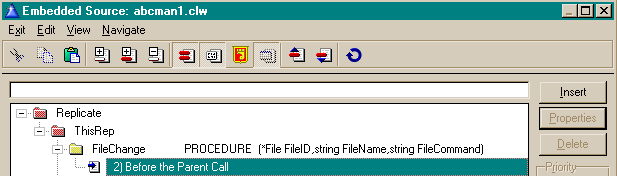

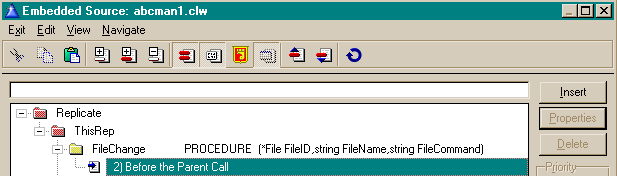

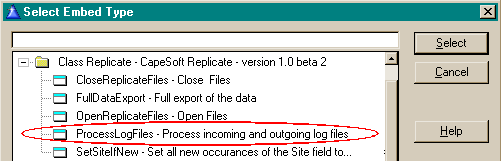

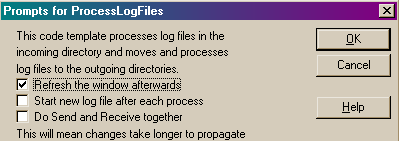

In your

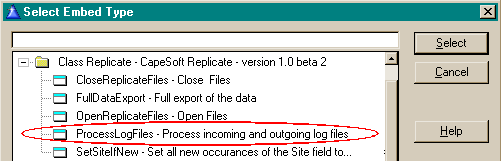

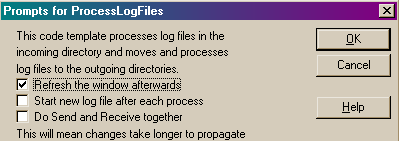

ReplicationControlWindow procedure, you

need to edit the

Process Incoming and Outgoing

logfiles Code template as follows:

Note: check the 'Start new log file after each process' check box.

You also need to ensure that all of the programs (log or LogManager) have the property ThisRep.NoCheckEOFEverytime clear (or 0). It is clear by default, so it will be clear unless you have set it manually.

Choosing transport on the fly

If you have a salesman with a laptop who is sometimes in the office and at other times connected remotely (via e-mail), it is often useful to be able to copy the files directly to his machine when he is in the office, and email them when he is out. This is very easy to do: simply set up both methods of transport in the relationship.

At the parent, set the incoming directory (on the laptop) to its correct path, and also add the email/FTP details. Do the same at the child site.

How it works

The LogManager tests whether the directory specified in DirectInDir exists. If it does, it copies the logfiles (compressing and/or encrypting them if required) to this directory without emailing them. If it doesn't, it emails/FTPs the logfiles.
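The decision rule above can be modelled in a few lines. This Python sketch is not the LogManager's code: send_remote is a hypothetical placeholder for your email/FTP transport (in the shipped examples that role is played by NetTalk), and compression/encryption are left out.

```python
import os
import shutil
from typing import Callable, List


def deliver(logfiles: List[str], direct_in_dir: str,
            send_remote: Callable[[str], None]) -> str:
    """Copy logfiles straight into the relating site's incoming directory
    when it is reachable; otherwise hand each file to the remote transport.

    send_remote is a placeholder for an email/FTP sender.
    """
    # An empty DirectInDir (none configured) also falls through to remote.
    if direct_in_dir and os.path.isdir(direct_in_dir):
        for path in logfiles:
            shutil.copy(path, direct_in_dir)
        return "direct"
    for path in logfiles:
        send_remote(path)
    return "remote"
```

Testing for the directory on every run is what makes the laptop case work without reconfiguration: the same relationship takes the direct path in the office and the remote path everywhere else.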

Creating a new child site

It is often useful to be able to create a child site without your users having to set up the connection between them and the parent, since too much can go wrong in the setup.

There is an example of how to do this in LM.app and LMdirect.app (a procedure called AutoCreateSite).

Basically, the Create-Child wizard will:

- create an INIFile containing the settings that the child site's LogManager will use.

- create an entry in your SiteFile describing the relationship to the child site.

- create a MessageFile with the information on how the child should relate to the parent, and place this MessageFile in the incoming directory (or FTPDirectory or Mailbox) of the child site.

- create a complete data set for this new site to import and use. (Note: this is a slow way of creating a complete data set, because records are exported and imported one at a time. It is better to create a database export (to TPS, for example) and start the new site with a complete database, rather than importing the complete database from a log file.)

All you basically need to do is ensure that the INIFile is placed in the correct directory (for the child site) and run the LogManager at the child site. The child's LogManager will create an entry in the SiteFile to maintain its settings. On its first ProcessLogFiles, it will find the MessageFile in its incoming directory (or FTPDirectory or Mailbox) and import it, i.e. create a record in the SiteFile that describes its relationship to the parent.

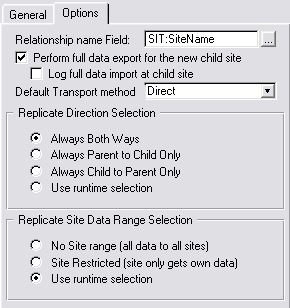

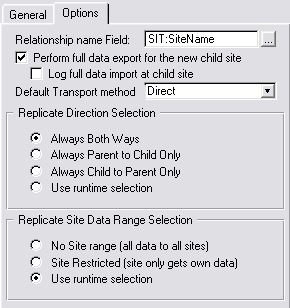

If you don't want a complete data export upon child creation (you may have an alternative method of setting up the initial data set), you can clear the 'Perform full data export for the new child site' checkbox in the 'Auto Create a Child Site' extension template on the AutoCreateSite procedure.

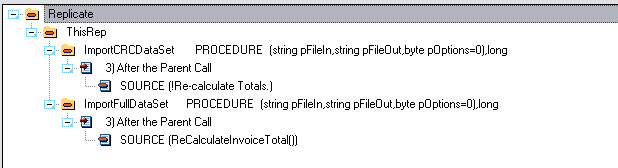

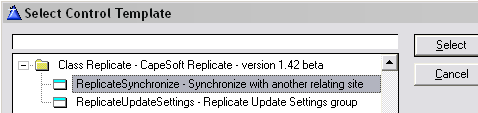

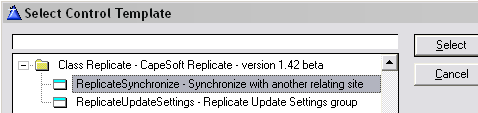

2.5. Synchronizing with another site

There is a control template to help you do this. Go to your BrowseSite window and add the Replicate - Synchronize control template to the window.

You can also use the CRC Check control template to do an abridged (and much quicker) comparison between two sites.
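How the CRC Check template computes and exchanges its checksums isn't described here, so the following is only a generic Python illustration of why a CRC comparison is so much quicker: each site reduces every table to a single checksum, and only the tables whose checksums disagree need the full Synchronize treatment. The row format and the sort-before-hashing step are assumptions of this sketch. A matching CRC makes a difference very unlikely but does not strictly prove the tables are identical.

```python
import zlib
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, ...]


def table_crc(rows: Iterable[Row]) -> int:
    """CRC32 of a table's rows, sorted first so that the physical record
    order at each site doesn't affect the result."""
    crc = 0
    for row in sorted(rows):
        # \x1f separates fields and \x1e ends each row, so both field and
        # row boundaries affect the checksum.
        data = ("\x1f".join(row) + "\x1e").encode("utf-8")
        crc = zlib.crc32(data, crc)
    return crc


def tables_to_sync(local: Dict[str, List[Row]],
                   remote_crcs: Dict[str, int]) -> List[str]:
    """Tables whose CRCs differ (or are missing remotely): only these
    need the full, record-by-record synchronize."""
    return sorted(name for name, rows in local.items()
                  if table_crc(rows) != remote_crcs.get(name))
```

Only one integer per table has to travel between the sites, which is why this kind of check is cheap compared with shipping and comparing every record.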

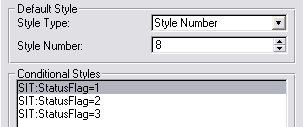

Implementing Status of Relating LogManagers

It's useful to be able to see what's happening at relating sites' LogManagers. The status feature indicates whether the relating sites are in normal operation, whether we have had to request a missing logfile, and whether a logfile we missed isn't actually there and needs to be remade (this happens automatically). You can use the request counters to count how many times a different logfile is requested, and so build up patterns for the different LogManagers to track down why logfiles are going missing in transit.
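As a rough model of the counters, here is a Python sketch. The field names come from the sitefile fields listed below, but the update rule (count a request only when it is for a different logfile than the last one) is an assumed reading of the description above, not Replicate's actual logic.

```python
from dataclasses import dataclass


@dataclass
class SiteRequestStatus:
    """Model of the three status fields kept per relating site."""
    StatusFlag: int = 0           # 0 assumed to mean normal operation
    NoOfFilesRequested: int = 0
    LastFileNoRequested: int = 0  # assumes logfile numbers start at 1

    def record_request(self, file_no: int) -> None:
        """Note that logfile file_no has been requested from this site."""
        if file_no != self.LastFileNoRequested:
            # A different logfile from the last request: count it.
            self.NoOfFilesRequested += 1
            self.LastFileNoRequested = file_no
        self.StatusFlag = 1  # 1: missing logfile being requested


if __name__ == "__main__":
    status = SiteRequestStatus()
    for n in (5, 5, 7, 9):
        status.record_request(n)
    print(status.NoOfFilesRequested, status.LastFileNoRequested)  # prints 3 9
```

Under this model, a counter that climbs steadily suggests a transport that keeps dropping files, while a counter that stays put as the same number is requested again and again points to one particular logfile that never arrives.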

To implement:

- Add 3 fields to your sitefile (this is done automatically in BDE for users creating the sitefile with BDE version 2.07 and up):

StatusFlag byte
NoOfFilesRequested long
LastFileNoRequested long

- Enter these 3 fields in their respective prompts of the Replicate Global Extension template in the LogManager (on the Sitefile tab).

- In your ReplicationControlWindow window, add the following in the embed point After Opening the Window:

?Browse:1{propstyle:backcolor,1} = color:blue
?Browse:1{propstyle:textselected,1} = color:blue
?Browse:1{propstyle:backcolor,2} = color:yellow
?Browse:1{propstyle:textselected,2} = color:yellow
?Browse:1{propstyle:backcolor,3} = color:red
?Browse:1{propstyle:textselected,3} = color:red

where ?Browse:1 is the field equate (ID) of the browse control on the site table.

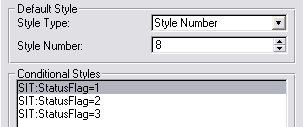

- In the window formatter (of the ReplicationControlWindow window), turn on the Style option (in the List box formatter) for whichever fields you want styled with the status. Then go to the Actions tab of your list properties and set the Styles customization to the following:

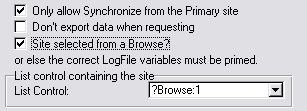

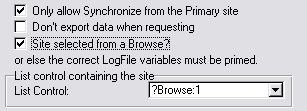

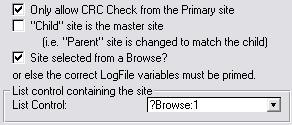

Note: The StatusFlag is set to 1 (level:notify) when a logfile is missing and is being requested from the relating site (normally color:blue). It is set to 2 (level:warning, color:yellow) when the relating site cannot find the missing logfile to send through. If a LogManager is set up to do nothing when one of the logfiles from its own site has gone missing, the status changes to 3 (level:error) and the color is set to red.
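If you also want the status in words (say, for a tooltip or a log line), the flag values and colours can be captured in a small lookup. This Python sketch mirrors the values 1 to 3 and the colours from the ?Browse:1 style assignments; treating 0 as normal operation with no style is an assumption.

```python
from typing import NamedTuple


class Status(NamedTuple):
    meaning: str
    color: str  # list-box style colour used for this flag


# Values 1-3 and their colours follow the documentation; 0 is assumed.
STATUS_STYLES = {
    0: Status("normal operation", "default"),
    1: Status("missing logfile being requested from the relating site", "blue"),
    2: Status("relating site cannot find the missing logfile", "yellow"),
    3: Status("own logfile missing and LogManager set to do nothing", "red"),
}


def describe(status_flag: int) -> Status:
    """Translate a sitefile StatusFlag into its meaning and colour."""
    try:
        return STATUS_STYLES[status_flag]
    except KeyError:
        raise ValueError(f"unknown StatusFlag {status_flag}") from None
```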

Controlling the LogManager Externally

You must have NetTalk in order to use this feature, and you'll almost certainly want to make your LogManager a service (which you can do easily

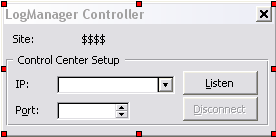

using